Chapter 21 Time Series Models

21.1 Univariate Time Series Modeling

Any metric that is measured over regular time intervals forms a time series.

Time series analysis is commercially important because of industrial need and relevance, especially for forecasting (demand, sales, supply, etc.).

In this chapter we will consider fundamental issues related to time series models like:

- Naive models

- Linear models

- ETS models

- Seasonal and non-seasonal ARIMA models

21.2 Time series CHEAT-SHEET

Before we continue, here is a summary of the most popular functions that can help you handle time series.

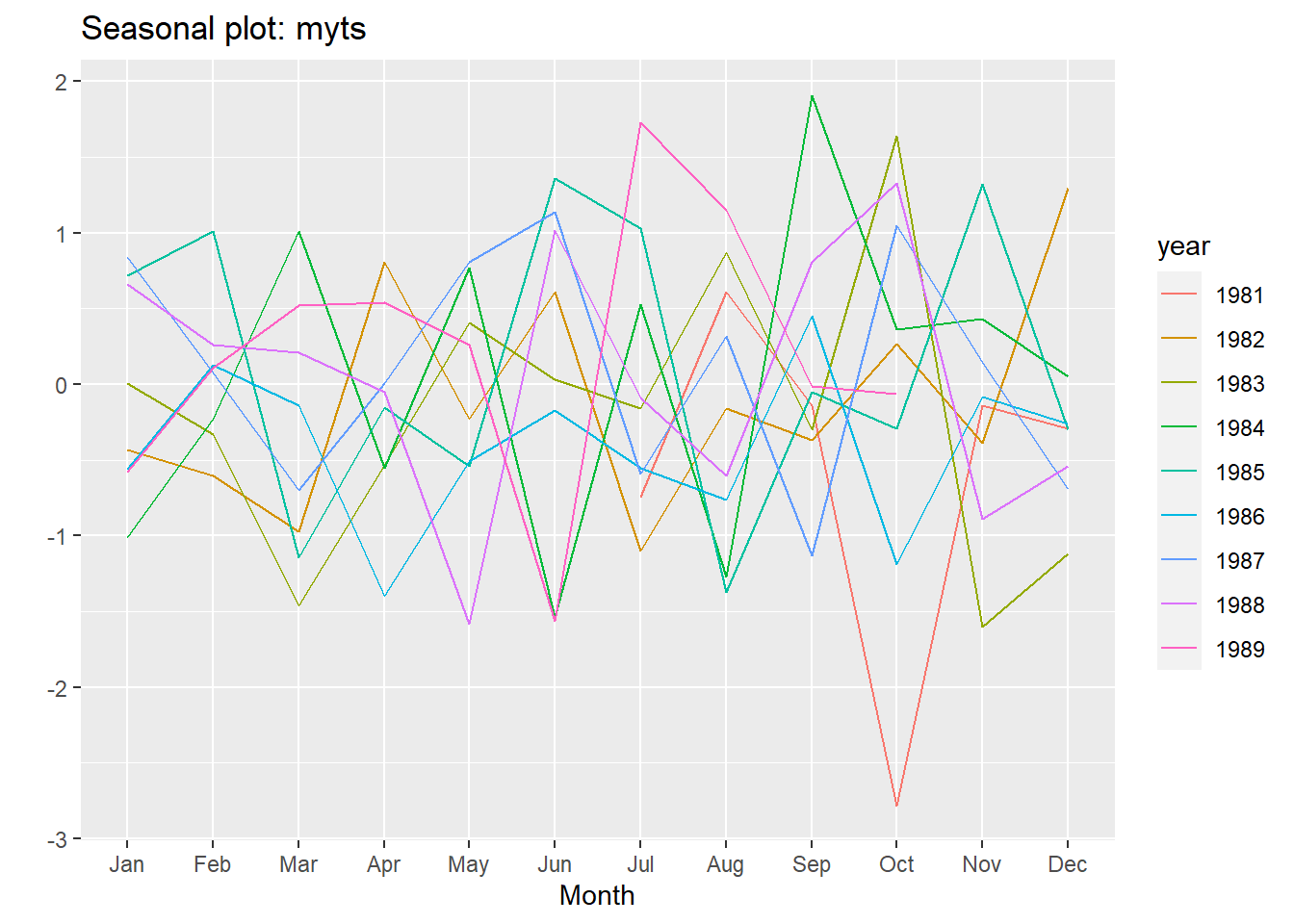

21.2.3 Seasonality

ggseasonplot(myts)  # Create a seasonal plot

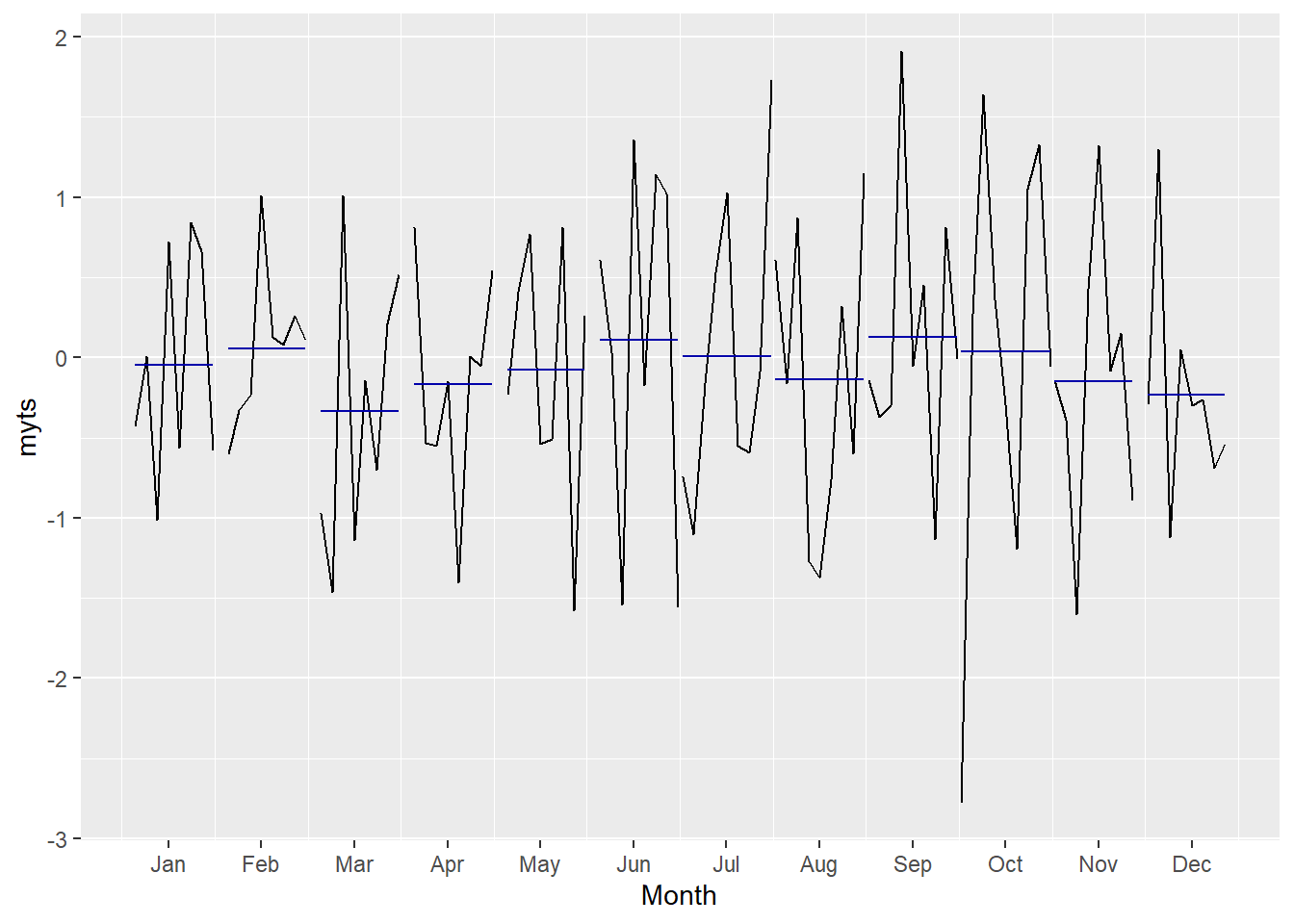

ggsubseriesplot(myts)  # Create mini plots for each season and show seasonal means

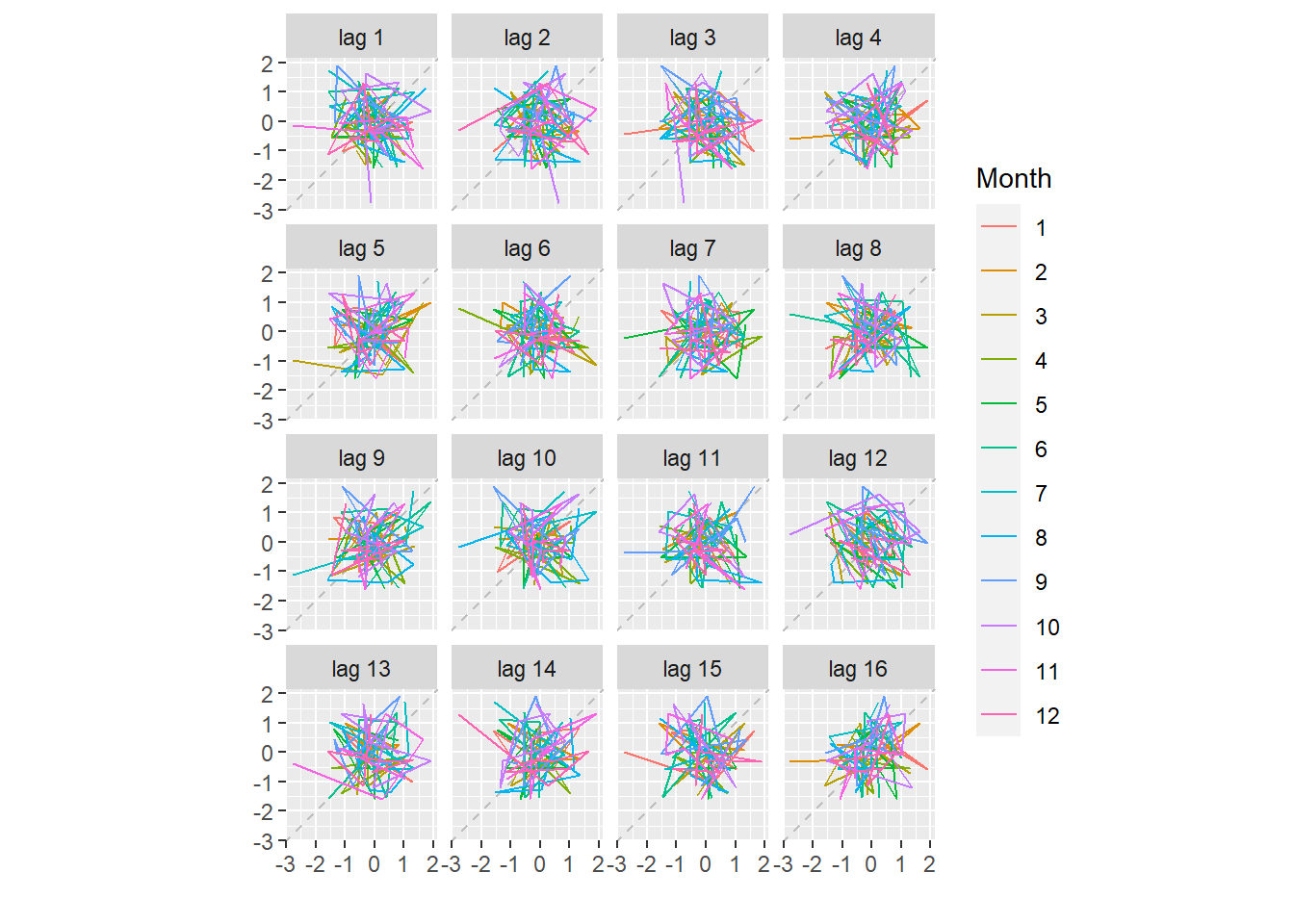

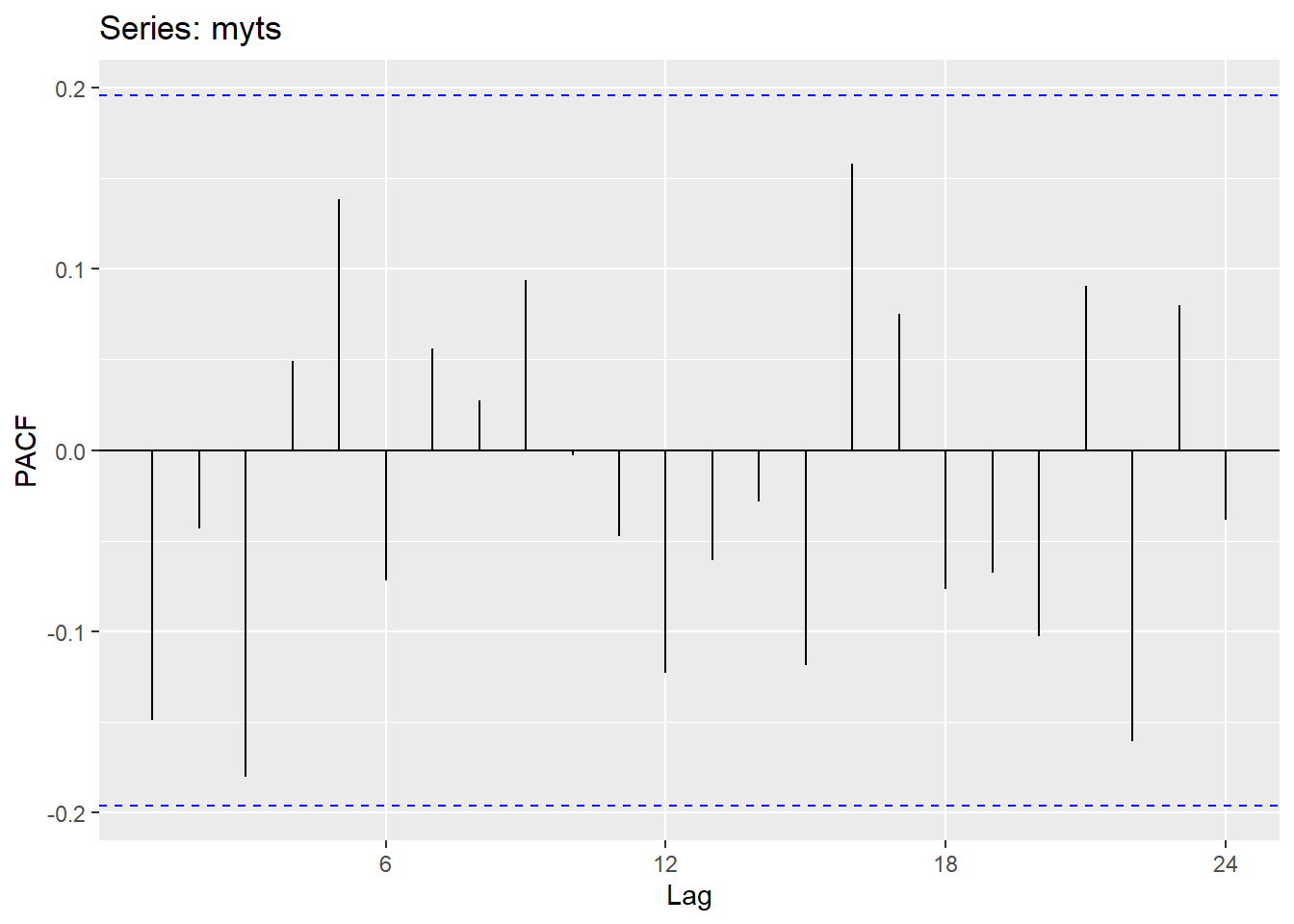

21.2.4 Lags and ACF, PACF

gglagplot(myts)  # Plot the time series against lags of itself

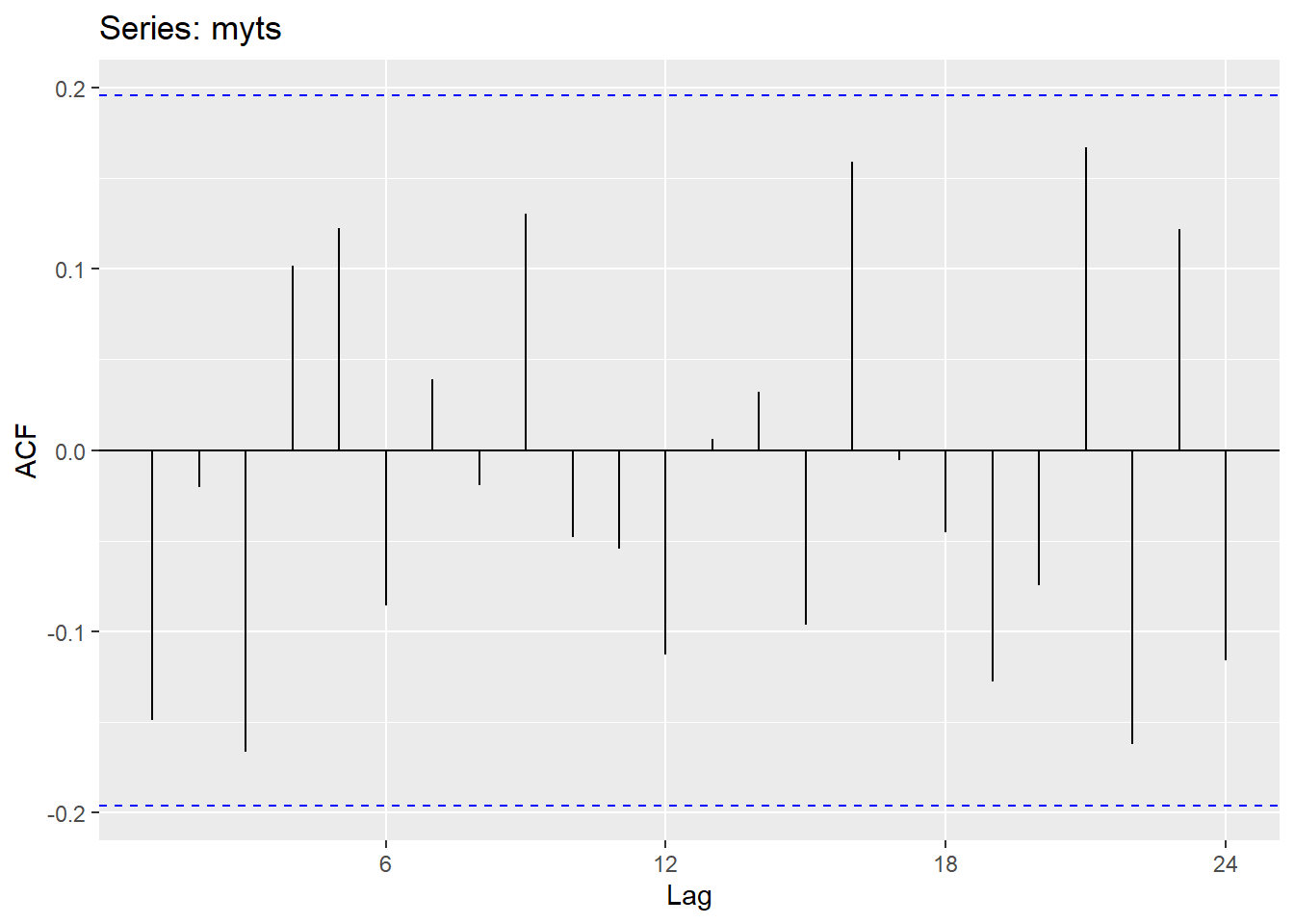

ggAcf(myts)  # Plot the autocorrelation function (ACF)

ggPacf(myts)  # Plot the partial autocorrelation function (PACF)

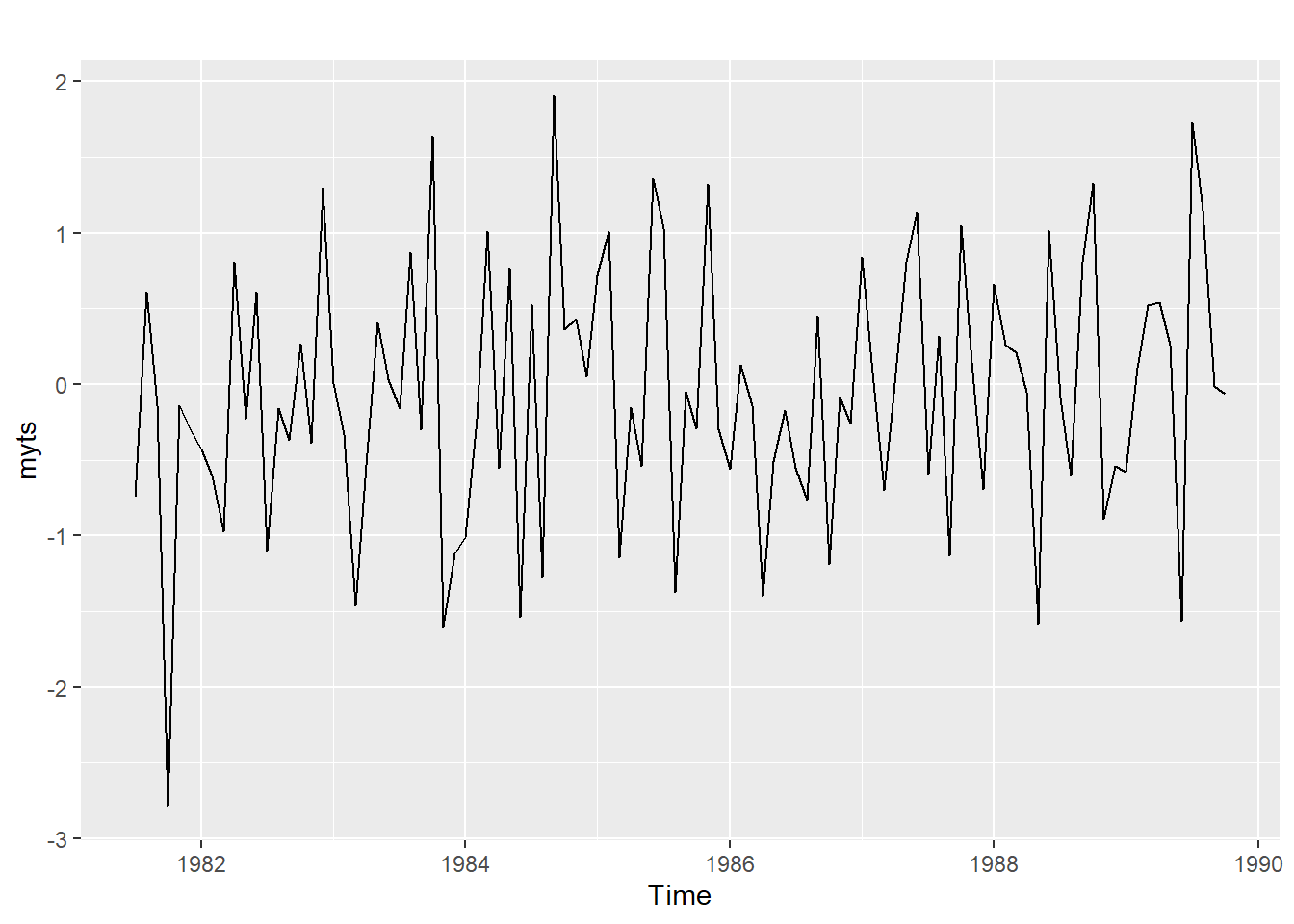

21.2.5 White Noise and the Ljung-Box Test

White noise is another name for a time series of iid data, i.e. a purely random series. Ideally, your model residuals should look like white noise.

You can use the Ljung-Box test to check whether a time series is white noise. Here is an example with 24 lags:

Box.test(myts, lag = 24, type = "Ljung-Box")

##

## Box-Ljung test

##

## data: myts

## X-squared = 31, df = 24, p-value = 0.2

A p-value > 0.05 suggests the data are not significantly different from white noise.
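To see how the test separates the two cases, the sketch below applies base R's Box.test() to two simulated series: pure white noise and an AR(1) process. The seed, the series length of 200 and the coefficient 0.8 are assumptions chosen for illustration, not data from this chapter.

```r
set.seed(123)  # arbitrary seed, only for reproducibility

# Simulated white noise: 200 independent standard normal draws
wn <- ts(rnorm(200))

# Simulated AR(1) series with coefficient 0.8 (strongly autocorrelated)
ar1 <- arima.sim(model = list(ar = 0.8), n = 200)

# Ljung-Box test with 24 lags on each series
lb_wn  <- Box.test(wn,  lag = 24, type = "Ljung-Box")
lb_ar1 <- Box.test(ar1, lag = 24, type = "Ljung-Box")

# A large p-value means no evidence against white noise;
# a very small p-value means the series is clearly autocorrelated.
print(lb_wn$p.value)
print(lb_ar1$p.value)
```

The autocorrelated series should be rejected decisively, while the white noise series will usually (though not always, since the test has a 5% false positive rate) return a p-value above 0.05.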

21.2.6 Model Selection

The forecast package includes several common models out of the box. Fit the model, then call forecast() on the fitted object together with the number of periods h to predict.

Example of the workflow:

train <- window(data, start = 1980)

fit <- naive(train)

checkresiduals(fit)

pred <- forecast(fit, h=4)

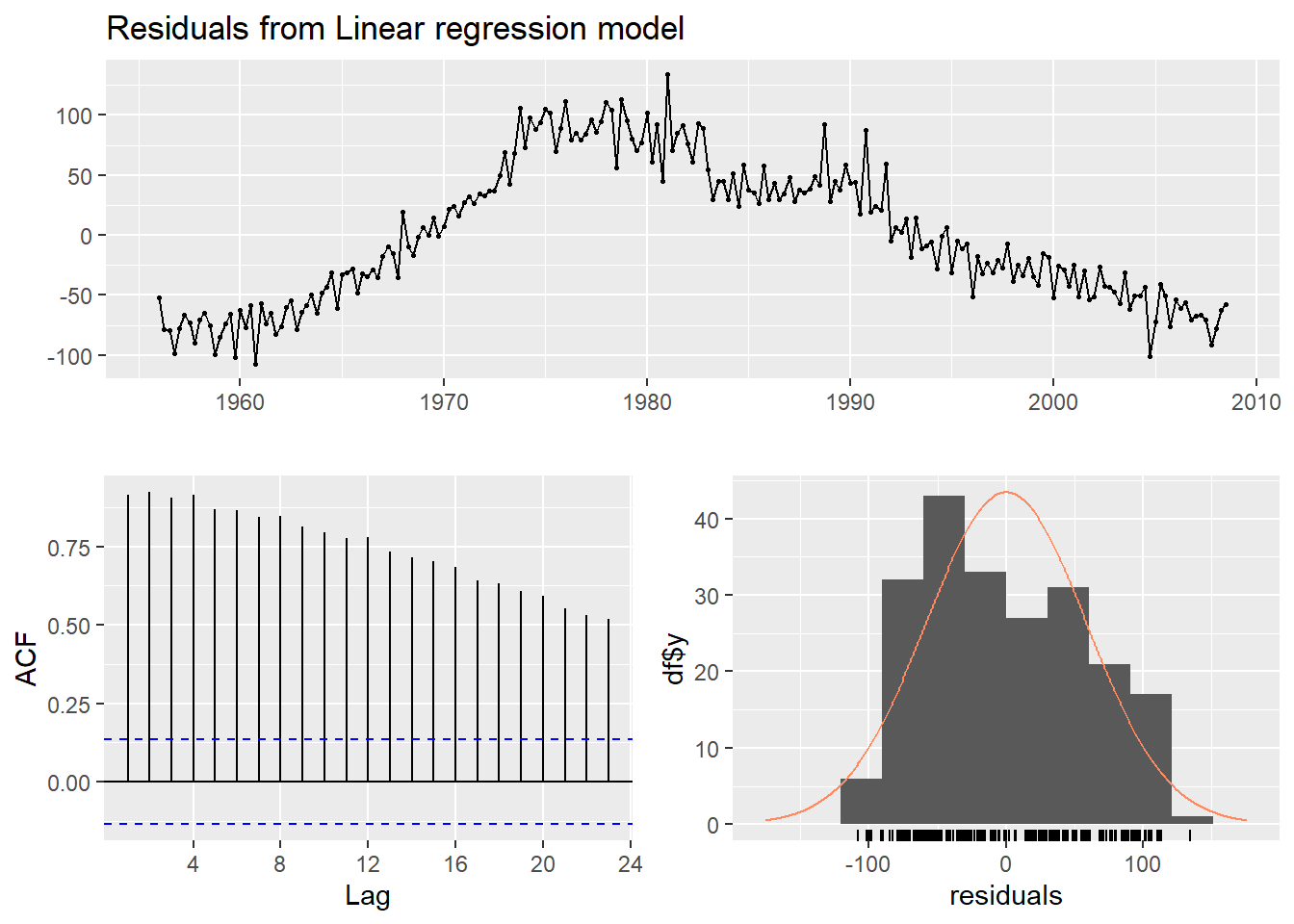

accuracy(pred, data)

21.2.8 Residuals

Residuals are the differences between the model's fitted values and the actual data. Residuals should look like white noise, that is, they should:

- Be uncorrelated

- Have mean zero

And ideally have:

- Constant variance

- A normal distribution

checkresiduals(): helper function to plot the residuals, plot the ACF and histogram, and do a Ljung-Box test on the residuals.

21.2.9 Evaluating Model Accuracy

Train/Test split with window function:

window(data, start, end): slices the ts data.

Use accuracy() on the model and test set:

accuracy(model, testset): provides accuracy measures such as RMSE, MAE, MAPE and MASE.

Backtesting with one-step-ahead forecasts, also known as time series cross-validation, can be done with the helper function tsCV().
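Both evaluation ideas can be sketched in base R without the forecast package. The series `y`, the hold-out length of four quarters and the variable names are assumptions made for illustration, and the naive method stands in for any model.

```r
# Hypothetical quarterly series used only for illustration
y <- ts(c(12, 15, 14, 18, 17, 21, 19, 24, 22, 27, 25, 30),
        start = c(2020, 1), frequency = 4)

# Train/test split with window(): hold out the last 4 quarters
train <- window(y, end = c(2021, 4))
test  <- window(y, start = c(2022, 1))

# Naive forecast: every future value equals the last training value
last_value <- as.numeric(train)[length(train)]
fc <- rep(last_value, length(test))

# Test-set accuracy measures
err  <- as.numeric(test) - fc
mae  <- mean(abs(err))
rmse <- sqrt(mean(err^2))

# Backtesting: one-step-ahead naive forecast errors from a rolling origin.
# For origin t, the forecast of y[t + 1] is y[t].
cv_err <- sapply(seq_len(length(y) - 1), function(t) y[t + 1] - y[t])
```

For the naive method the rolling-origin errors are simply the first differences of the series, which makes this a convenient sanity check before moving on to models with estimated parameters.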

tsCV(): returns forecast errors given a forecastfunction that returns a forecast object and a number of steps ahead h. For a method with no estimated parameters, such as naive(), the forecast errors at h = 1 are just the model residuals.

Here's an example using the naive() model, forecasting one period ahead:

tsCV(data, forecastfunction = naive, h = 1)

21.2.10 Many Models

Exponential Models

- ses(): Simple Exponential Smoothing, applies a smoothing parameter alpha to previous data

- holt(): Holt's linear trend, SES + trend parameter. Use damped=TRUE for damped trending

- hw(): Holt-Winters method, incorporates linear trend and seasonality. Set seasonal="additive" for the additive version or seasonal="multiplicative" for the multiplicative version

ETS Models

The forecast package includes a function ets() for your exponential smoothing models. ets() estimates parameters using the likelihood of the data arising from the model, and selects the best model using corrected AIC (AICc). The candidate components are:

- Error = {A, M}

- Trend = {N, A, Ad}

- Seasonal = {N, A, M}

ARIMA

Arima(): fits an ARIMA model with a given order; set include.constant = TRUE to include the constant (drift).

auto.arima(): Automatic implementation of ARIMA modelling in forecast. Estimates parameters using maximum likelihood and does a stepwise search over a subset of all possible models. Can take a lambda argument to fit the model to transformed data; the forecasts are then back-transformed onto the original scale. Set stepwise = FALSE to consider more models at the expense of more time.

TBATS

Automated model that combines exponential smoothing, Box-Cox transformations, and Fourier terms. Pro: Automated, allows for complex seasonality that changes over time. Cons: Slow.

- T: Trigonometric terms for seasonality

- B: Box-Cox transformations for heterogeneity

- A: ARMA errors for short term dynamics

- T: Trend (possibly damped)

- S: Seasonal (including multiple and non-integer periods)

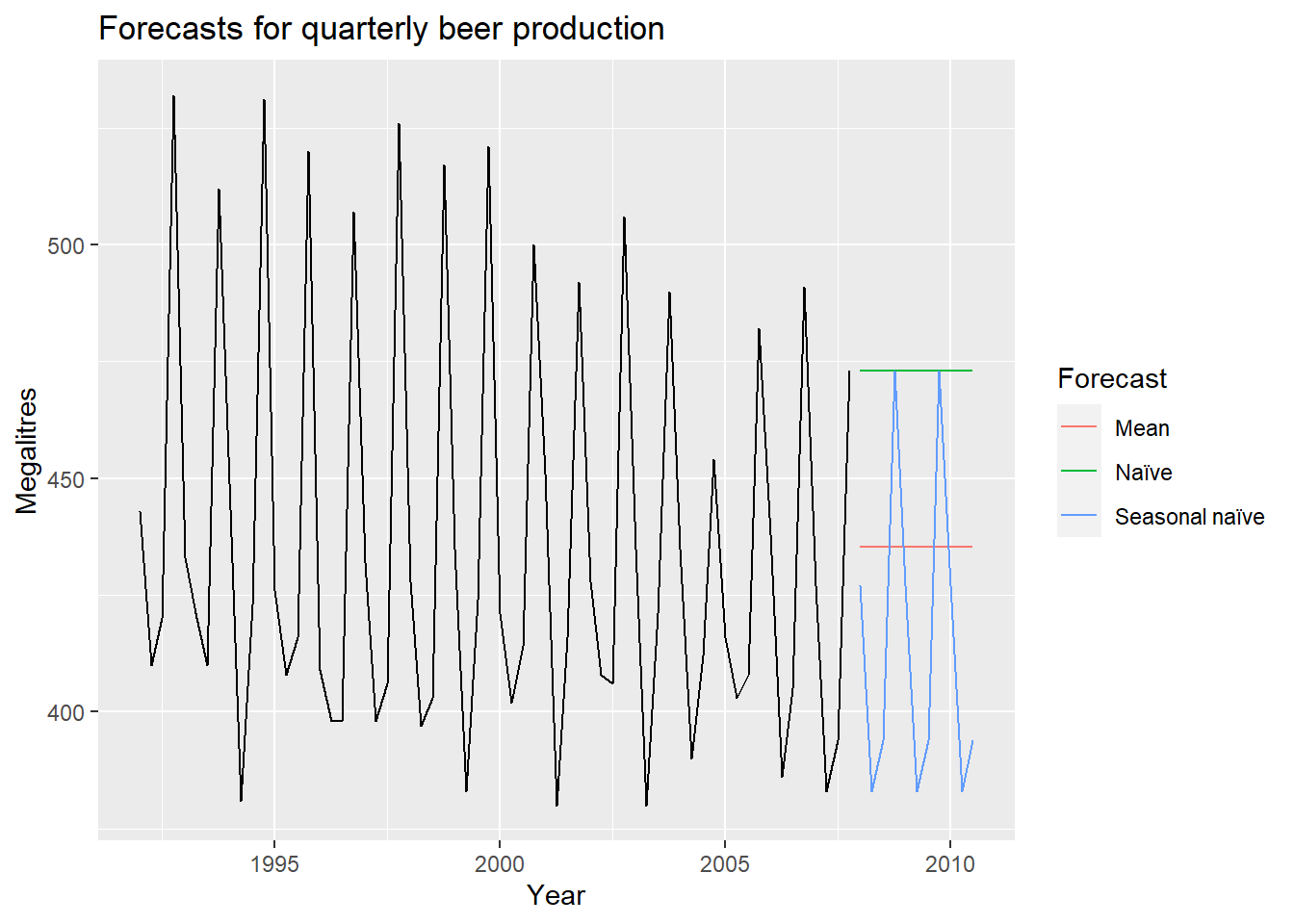

21.3 Naive approach

Using the naive method, all forecasts for the future are equal to the last observed value of the series. That is, $\hat{y}_{T+h|T} = y_T$. This method works remarkably well for many economic and financial time series.

Using the average method, the forecasts of all future values are equal to the mean of the historical data. If we let the historical data be denoted by $y_1, \dots, y_T$, then we can write the forecasts as $\hat{y}_{T+h|T} = \bar{y} = (y_1 + \dots + y_T)/T$. The notation $\hat{y}_{T+h|T}$ is a short-hand for the estimate of $y_{T+h}$ based on the data $y_1, \dots, y_T$.

21.3.1 Seasonal naive method

A similar method is useful for highly seasonal data. In this case, we set each forecast to be equal to the last observed value from the same season of the year (e.g., the same month of the previous year). Formally, the forecast for time $T+h$ is written as $\hat{y}_{T+h|T} = y_{T+h-m(k+1)}$, where $m$ is the seasonal period and $k$ denotes the integer part of $(h-1)/m$ (the number of complete years in the forecast period prior to time $T+h$). That looks more complicated than it really is.

For example, with monthly data, the forecast for all future February values is equal to the last observed February value. With quarterly data, the forecast of all future Q2 values is equal to the last observed Q2 value (where Q2 means the second quarter). Similar rules apply for other months and quarters, and for other seasonal periods.

21.3.2 Drift method

A variation on the naive method is to allow the forecasts to increase or decrease over time, where the amount of change over time (called the “drift”) is set to be the average change seen in the historical data. Thus the forecast for time $T+h$ is given by $\hat{y}_{T+h|T} = y_T + \frac{h}{T-1}\sum_{t=2}^{T}(y_t - y_{t-1}) = y_T + h\left(\frac{y_T - y_1}{T-1}\right)$. This is equivalent to drawing a line between the first and last observations, and extrapolating it into the future.
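The four benchmark methods of this section (naive, average, seasonal naive and drift) can be computed by hand in a few lines of base R. The series `y` is a made-up two-year quarterly example, and the variable names are assumptions for illustration only.

```r
# Hypothetical quarterly observations (two years), seasonal period m = 4
y <- c(10, 12, 14, 13, 11, 13, 15, 14)
n <- length(y)   # T in the text
m <- 4           # seasonal period
h <- 1:6         # forecast horizons

# Naive: last observed value
fc_naive <- rep(y[n], length(h))

# Average: mean of all historical data
fc_mean <- rep(mean(y), length(h))

# Seasonal naive: last observed value from the same season
k <- (h - 1) %/% m                 # integer part of (h - 1) / m
fc_snaive <- y[n + h - m * (k + 1)]

# Drift: last value plus h times the average historical change
fc_drift <- y[n] + h * (y[n] - y[1]) / (n - 1)
```

Note how the seasonal naive forecasts repeat the last observed year, while the drift forecasts continue the straight line through the first and last observations.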

21.4 Linear models

21.4.1 Multiple regression

$$y_t = \beta_0 + \beta_1 x_{1,t} + \beta_2 x_{2,t} + \cdots + \beta_k x_{k,t} + \varepsilon_t$$

- $y_t$ is the variable we want to predict: the response variable

- Each $x_{j,t}$ is numerical and is called a predictor. They are usually assumed to be known for all past and future times.

- The coefficients $\beta_1, \dots, \beta_k$ measure the effect of each predictor after taking account of the effect of all other predictors in the model. That is, the coefficients measure the marginal effects.

- $\varepsilon_t$ is a white noise error term

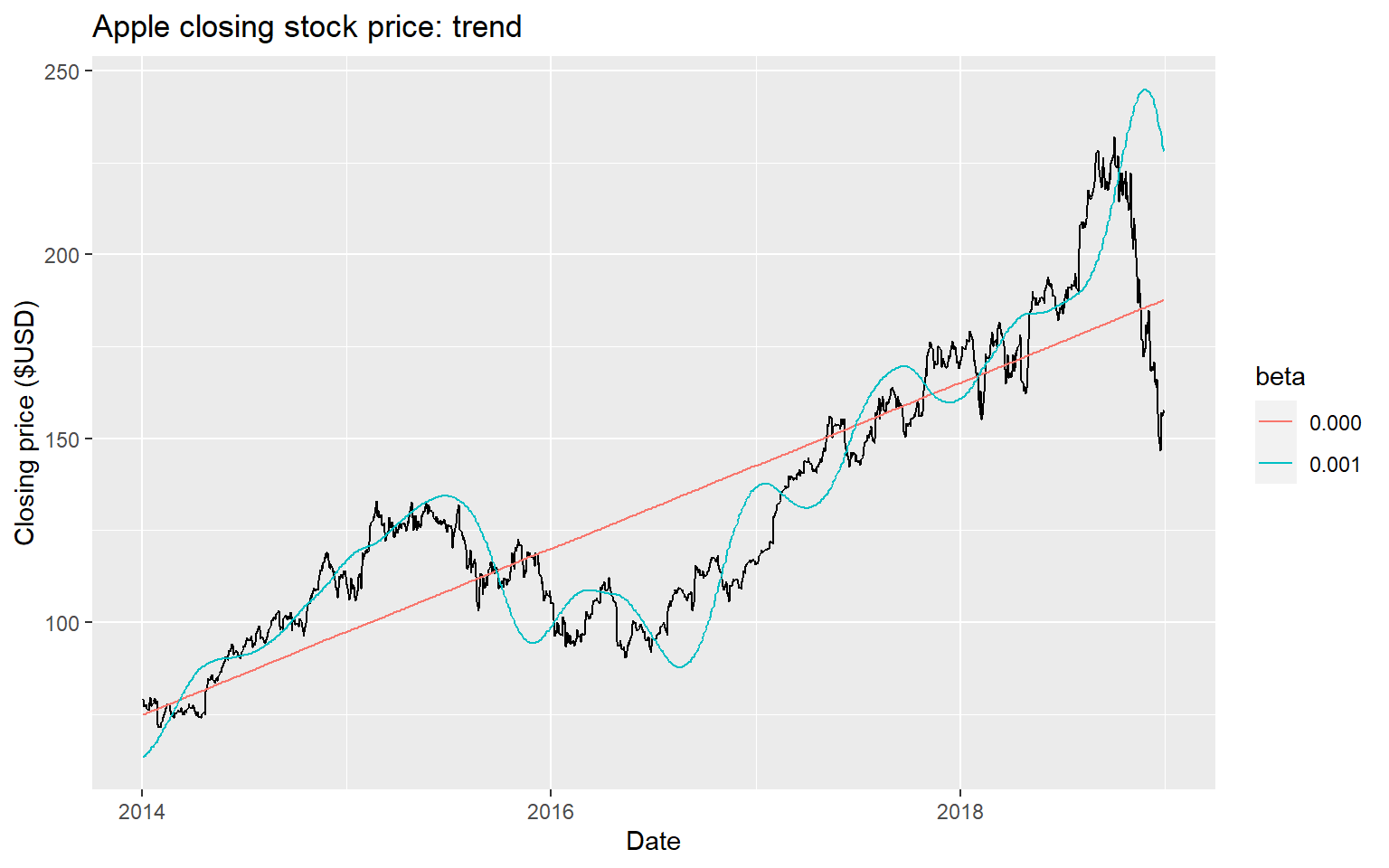

21.4.3 Trend

Linear trend

- Use $x_t = t$, for $t = 1, \dots, T$, as a predictor.

- Strong assumption that trend will continue.

Seasonal dummies

- For quarterly data: use 3 dummies

- For monthly data: use 11 dummies

- For daily data: use 6 dummies

- What to do with weekly data?

Outliers

- If there is an outlier, you can use a dummy variable (taking value 1 for that observation and 0 elsewhere) to remove its effect.

Public holidays

- For daily data: if it is a public holiday, dummy=1, otherwise dummy=0.
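The trend and seasonal dummy predictors described above can be sketched with base R's lm(). The data are simulated, with an assumed intercept of 100, slope of 0.5 and made-up quarterly effects, so that the estimated coefficients can be compared with known values.

```r
set.seed(1)  # arbitrary seed for the simulated noise

n <- 40                                  # ten years of quarterly data
trend   <- seq_len(n)                    # linear trend predictor x_t = t
quarter <- factor(rep(1:4, length.out = n))

# Simulated response: intercept 100, slope 0.5, quarterly effects, small noise
season_effect <- c(0, -10, -5, 8)[as.integer(quarter)]
y <- 100 + 0.5 * trend + season_effect + rnorm(n, sd = 0.1)

# lm() expands the factor into 3 dummies; quarter 1 is the omitted baseline
fit <- lm(y ~ trend + quarter)
print(round(coef(fit), 2))

# The design matrix shows the dummy columns explicitly
head(model.matrix(fit), 4)
```

Only three dummy columns appear for four quarters: including a fourth alongside the intercept would make the predictors perfectly collinear, which is why one season is always left out as the baseline.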

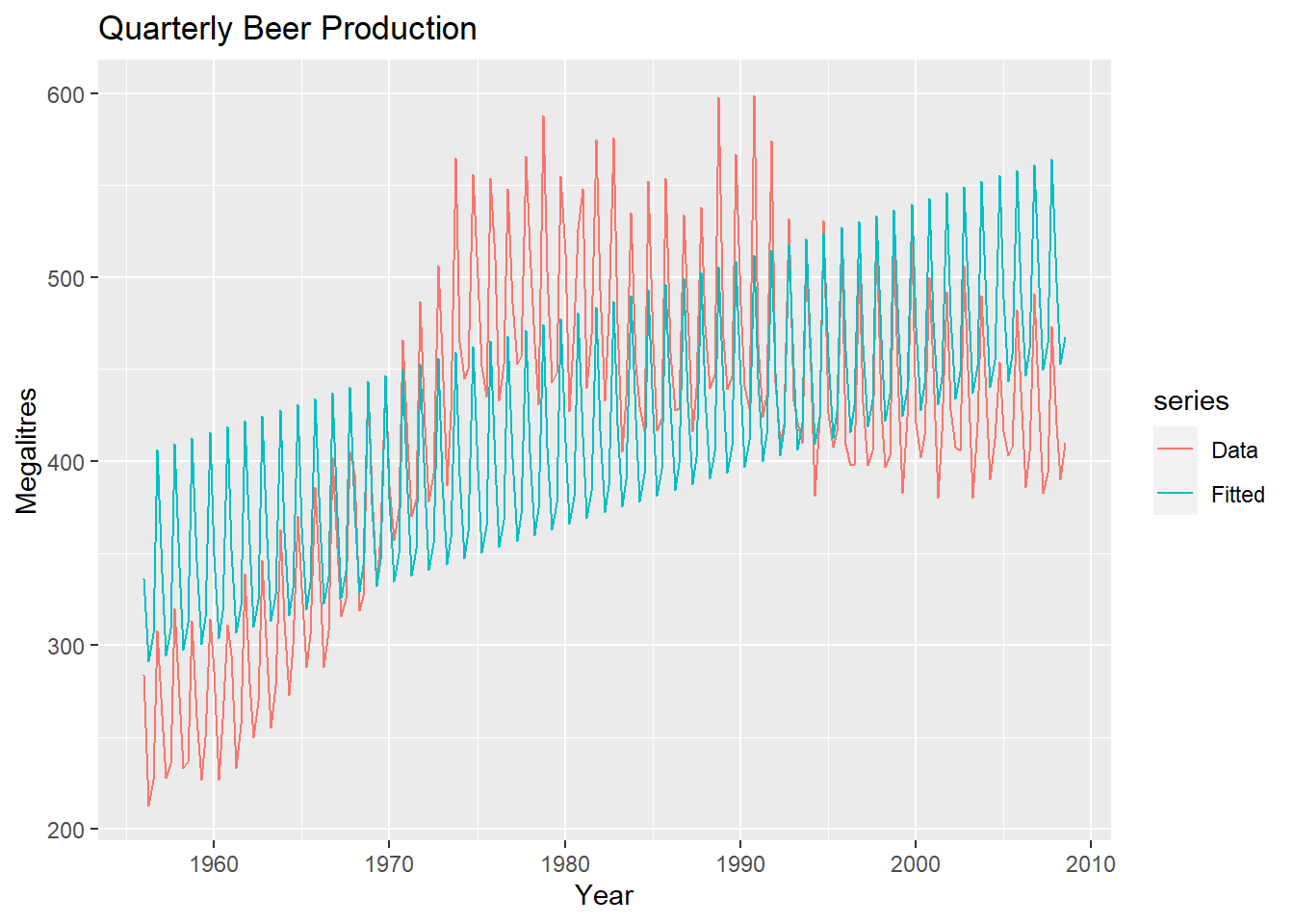

21.4.4 Beer production - example

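Regression model:

$$y_t = \beta_0 + \beta_1 t + \beta_2 d_{2,t} + \beta_3 d_{3,t} + \beta_4 d_{4,t} + \varepsilon_t$$

- $d_{i,t} = 1$ if $t$ is quarter $i$ and 0 otherwise.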

- The first quarter variable has been omitted, so the coefficients associated with the other quarters are measures of the difference between those quarters and the first quarter.

fit.beer <- tslm(fpp::ausbeer ~ trend + season)

summary(fit.beer)

##

## Call:

## tslm(formula = fpp::ausbeer ~ trend + season)

##

## Residuals:

## Min 1Q Median 3Q Max

## -107.66 -50.64 -9.02 44.54 134.20

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 335.4871 10.7323 31.26 < 2e-16 ***

## trend 0.7754 0.0668 11.60 < 2e-16 ***

## season2 -45.5679 11.4848 -3.97 0.0001 ***

## season3 -31.6829 11.4854 -2.76 0.0063 **

## season4 67.6651 11.5399 5.86 1.8e-08 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 59.1 on 206 degrees of freedom

## Multiple R-squared: 0.547, Adjusted R-squared: 0.538

## F-statistic: 62.1 on 4 and 206 DF, p-value: <2e-16

There is an average upward trend of 0.78 megalitres per quarter. On average, the second quarter has production 45.6 megalitres lower than the first quarter, the third quarter has production 31.7 megalitres lower than the first quarter, and the fourth quarter has production 67.7 megalitres higher than the first quarter.

autoplot(fpp::ausbeer, series="Data") +

autolayer(fitted(fit.beer), series="Fitted") +

xlab("Year") + ylab("Megalitres") +

ggtitle("Quarterly Beer Production")

Now we can run the diagnostics (OLS!):

checkresiduals(fit.beer, test=FALSE)

21.5 ETS models

21.5.1 Historical perspective

- Developed in the 1950s and 1960s as methods (algorithms) to produce point forecasts.

- Combine a level, trend (slope) and seasonal component to describe a time series.

- The rate of change of the components is controlled by smoothing parameters: $\alpha$, $\beta$ and $\gamma$ respectively.

- Need to choose best values for the smoothing parameters (and initial states).

- Equivalent ETS state space models developed in the 1990s and 2000s.

21.5.2 Simple method

- Simple exponential smoothing is suitable for forecasting data with no clear trend or seasonal pattern.

- Exponential smoothing was proposed in the late 1950s (Brown, 1959; Holt, 1957; Winters, 1960), and has motivated some of the most successful forecasting methods.

- Forecasts produced using exponential smoothing methods are weighted averages of past observations, with the weights decaying exponentially as the observations get older.

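- In other words, the more recent the observation the higher the associated weight: $\hat{y}_{T+1|T} = \alpha y_T + \alpha(1-\alpha) y_{T-1} + \alpha(1-\alpha)^2 y_{T-2} + \cdots$, where $0 \le \alpha \le 1$.

- The naive method puts all the weight on the last observation and the average method weights all observations equally. We want something in between that weights the most recent data more highly.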

- Simple exponential smoothing uses a weighted moving average with weights that decrease exponentially!

21.5.3 Optimisation

- The application of every exponential smoothing method requires the smoothing parameters and the initial values to be chosen.

- A more reliable and objective way to obtain values for the unknown parameters is to estimate them from the observed data.

- The unknown parameters and the initial values for any exponential smoothing method can be estimated by minimising the SSE.
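Estimating the smoothing parameter by minimising the SSE can be sketched in base R for simple exponential smoothing. This is a simplified illustration, not the routine used by ses() or ets(): the function name `ses_sse`, the made-up series, and fixing the initial level at the first observation (instead of estimating it) are all assumptions.

```r
# Sum of squared one-step-ahead errors for simple exponential smoothing.
# Assumption: the initial level is fixed at the first observation
# instead of being estimated.
ses_sse <- function(alpha, y, l0 = y[1]) {
  level <- l0
  sse <- 0
  for (obs in y) {
    err   <- obs - level           # one-step-ahead forecast error
    sse   <- sse + err^2
    level <- level + alpha * err   # equals alpha * obs + (1 - alpha) * level
  }
  sse
}

# Hypothetical series with no clear trend or seasonality
y <- c(50, 52, 47, 51, 49, 53, 48, 50, 52, 49)

# Search alpha in [0, 1] for the smallest SSE
opt <- optimize(ses_sse, interval = c(0, 1), y = y)
alpha_hat <- opt$minimum

# Weights attached to the 5 most recent observations
weights <- alpha_hat * (1 - alpha_hat)^(0:4)
```

With `alpha = 1` the recursion reduces to the naive method, and with `alpha = 0` the forecast never moves from its initial level, which gives two easy checks on the implementation.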

21.5.4 ETS models with trend

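Holt's exponential smoothing approach can fit a time series that has an overall level and a trend (slope):

$$\hat{y}_{t+h|t} = \ell_t + h b_t$$

$$\ell_t = \alpha y_t + (1-\alpha)(\ell_{t-1} + b_{t-1})$$

$$b_t = \beta^*(\ell_t - \ell_{t-1}) + (1-\beta^*) b_{t-1}$$

$\ell_t$: level, $b_t$: slope

State space form:

$$y_t = \ell_{t-1} + b_{t-1} + \varepsilon_t$$

$$\ell_t = \ell_{t-1} + b_{t-1} + \alpha\varepsilon_t$$

$$b_t = b_{t-1} + \beta\varepsilon_t$$

For simplicity, set $\beta = \alpha\beta^*$.

The smoothing parameter $\alpha$ controls the exponential decay for the level, and the smoothing parameter $\beta^*$ controls the exponential decay for the slope.

Each parameter ranges from 0 to 1, with larger values giving more weight to recent observations.

- Two smoothing parameters $\alpha$ and $\beta^*$ ($0 \le \alpha, \beta^* \le 1$).

- $\ell_t$ level: weighted average between $y_t$ and the one-step-ahead forecast for time $t$, $\ell_{t-1} + b_{t-1} = \hat{y}_{t|t-1}$.

- $b_t$ slope: weighted average of $(\ell_t - \ell_{t-1})$ and $b_{t-1}$, the current and previous estimates of the slope.

- Choose $\alpha, \beta^*, \ell_0, b_0$ to minimise SSE.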
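The level and slope updates of Holt's method can be written as a short recursion. The sketch below assumes the standard component form (new level = weighted average of the observation and the previous one-step forecast; new slope = weighted average of the latest level change and the previous slope). The function name `holt_forecast`, the parameter values and the test series are illustrative assumptions, and the parameters are supplied rather than estimated.

```r
# Holt's linear trend method with user-supplied parameters.
# alpha, beta_star: smoothing parameters in [0, 1]
# l0, b0: initial level and slope; h: number of steps to forecast
holt_forecast <- function(y, alpha, beta_star, l0, b0, h) {
  level <- l0
  slope <- b0
  for (obs in y) {
    prev_level <- level
    # Level: weighted average of the observation and the one-step forecast
    level <- alpha * obs + (1 - alpha) * (prev_level + slope)
    # Slope: weighted average of the level change and the previous slope
    slope <- beta_star * (level - prev_level) + (1 - beta_star) * slope
  }
  # h-step-ahead forecasts: last level plus h times the last slope
  level + seq_len(h) * slope
}

# Hypothetical perfectly linear series y_t = 5 + 2 t, t = 1, ..., 10
y  <- 5 + 2 * (1:10)
fc <- holt_forecast(y, alpha = 0.3, beta_star = 0.1, l0 = 5, b0 = 2, h = 3)
```

On an exactly linear series with correct initial states, every one-step error is zero, so the forecasts simply continue the line regardless of the smoothing parameters.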

21.5.5 Holt and Winters model

- The Holt-Winters exponential smoothing approach can be used to fit a time series that has an overall level, a trend, and a seasonal component.

- $s_t$: seasonal state (in addition to the level $\ell_t$ and the slope $b_t$).

21.5.6 Holt-Winters additive model

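$$\hat{y}_{t+h|t} = \ell_t + h b_t + s_{t+h-m(k+1)}$$

$$\ell_t = \alpha(y_t - s_{t-m}) + (1-\alpha)(\ell_{t-1} + b_{t-1})$$

$$b_t = \beta^*(\ell_t - \ell_{t-1}) + (1-\beta^*) b_{t-1}$$

$$s_t = \gamma(y_t - \ell_{t-1} - b_{t-1}) + (1-\gamma) s_{t-m}$$

- $k$ = integer part of $(h-1)/m$.

- Parameters: $0 \le \alpha \le 1$, $0 \le \beta^* \le 1$, $0 \le \gamma \le 1-\alpha$ and $m$ = period of seasonality (e.g. $m = 4$ for quarterly data).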

21.5.7 Holt-Winters multiplicative method

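- The Holt-Winters multiplicative method (with multiplicative errors) is used when seasonal variations change in proportion to the level of the series.

$$\hat{y}_{t+h|t} = (\ell_t + h b_t)\, s_{t+h-m(k+1)}$$

$$\ell_t = \alpha \frac{y_t}{s_{t-m}} + (1-\alpha)(\ell_{t-1} + b_{t-1})$$

$$b_t = \beta^*(\ell_t - \ell_{t-1}) + (1-\beta^*) b_{t-1}$$

$$s_t = \gamma \frac{y_t}{\ell_{t-1} + b_{t-1}} + (1-\gamma) s_{t-m}$$

- $k$ is the integer part of $(h-1)/m$.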
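The additive and multiplicative variants can be compared quickly with base R's HoltWinters(). Two caveats apply: HoltWinters() is a different implementation from hw() and ETS() (it uses heuristic starting values and minimises squared one-step errors), and the built-in AirPassengers data set is used here only as a stand-in series.

```r
# AirPassengers: monthly airline passenger totals shipped with base R
# (a stand-in data set; the chapter itself uses other series)
y <- AirPassengers

# stats::HoltWinters() estimates alpha, beta and gamma by minimising the
# squared one-step errors; it is not the same routine as hw() or ETS()
fit_add  <- HoltWinters(y, seasonal = "additive")
fit_mult <- HoltWinters(y, seasonal = "multiplicative")

# Compare the in-sample sum of squared errors of the two variants
print(c(additive = fit_add$SSE, multiplicative = fit_mult$SSE))

# Forecast the next 12 months from the multiplicative fit
fc <- predict(fit_mult, n.ahead = 12)
```

For a series whose seasonal swings grow with the level, the multiplicative form is usually the more natural choice, but the comparison of errors should be made on the data at hand.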

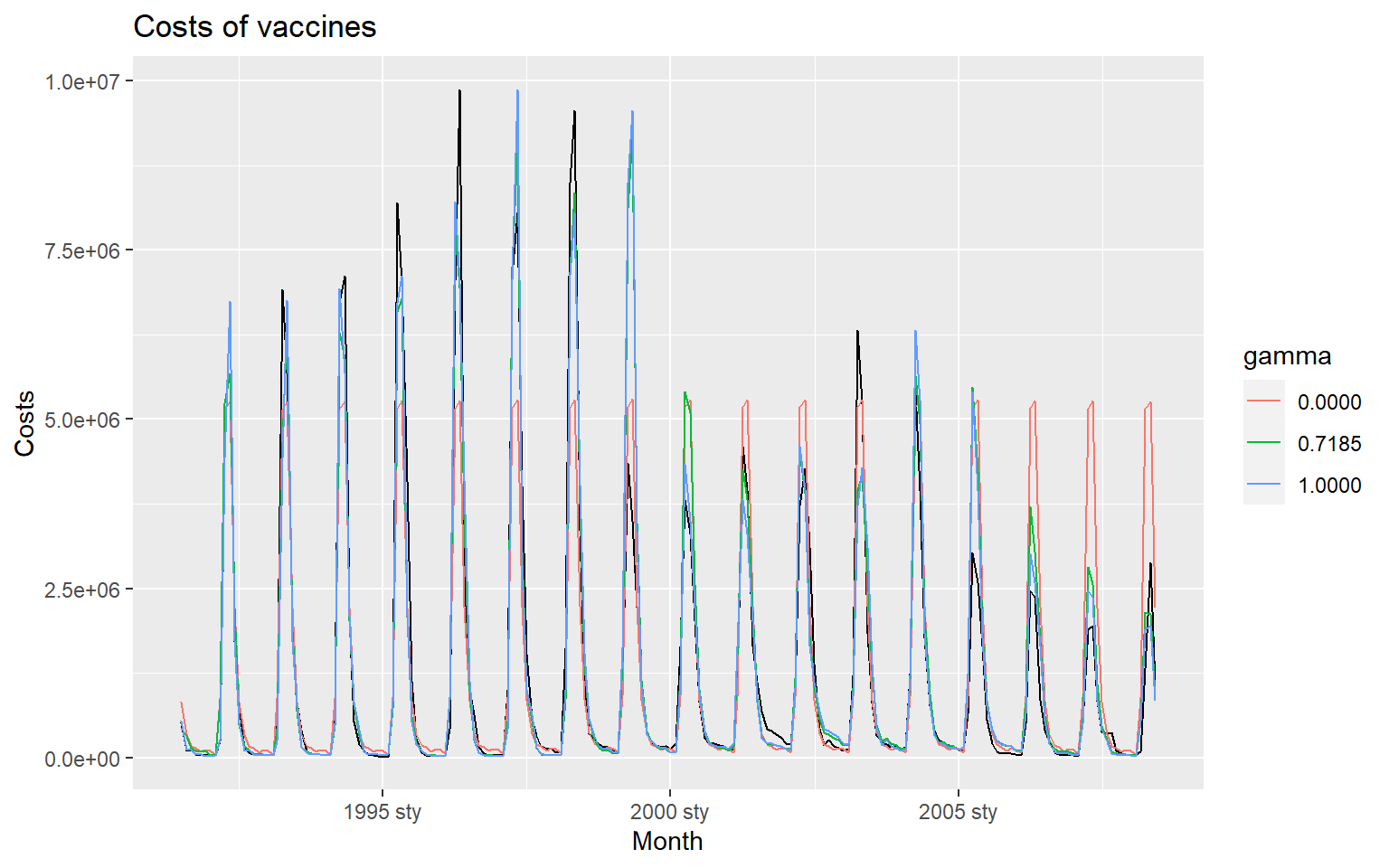

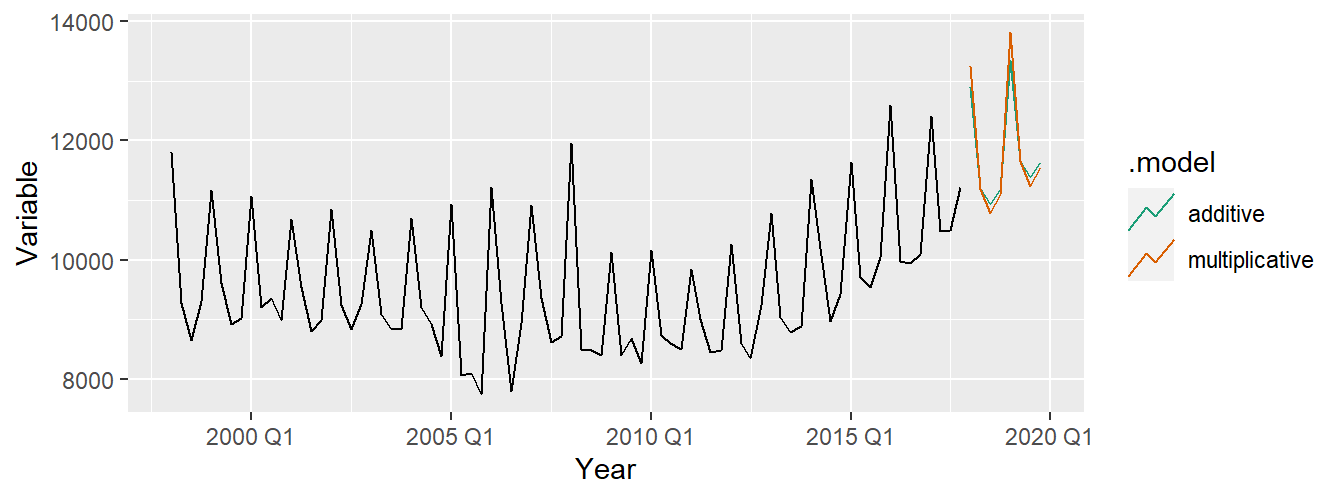

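library(fpp3)  # attaches fable, tsibble (which provides tourism), dplyr and ggplot2

# Total holiday trips across all regions, per quarter

aus_holidays <- tourism %>% filter(Purpose == "Holiday") %>%

summarise(Trips = sum(Trips))

# Fit additive and multiplicative Holt-Winters models as ETS models

fit <- aus_holidays %>%

model(

additive = ETS(Trips ~ error("A") + trend("A") + season("A")),

multiplicative = ETS(Trips ~ error("M") + trend("A") + season("M"))

)

fc <- fit %>% forecast()

# Plot point forecasts only (level = NULL hides prediction intervals)

fc %>%

autoplot(aus_holidays, level = NULL) + xlab("Year") +

ylab("Variable") +

scale_color_brewer(type = "qual", palette = "Dark2")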

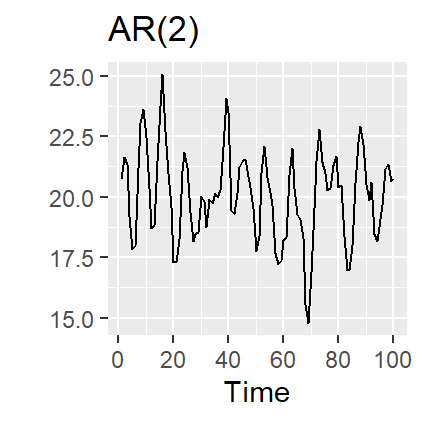

21.6 Autoregressive models

While exponential smoothing models are based on a description of the trend and seasonality in the data, ARIMA models aim to describe the autocorrelations in the data.

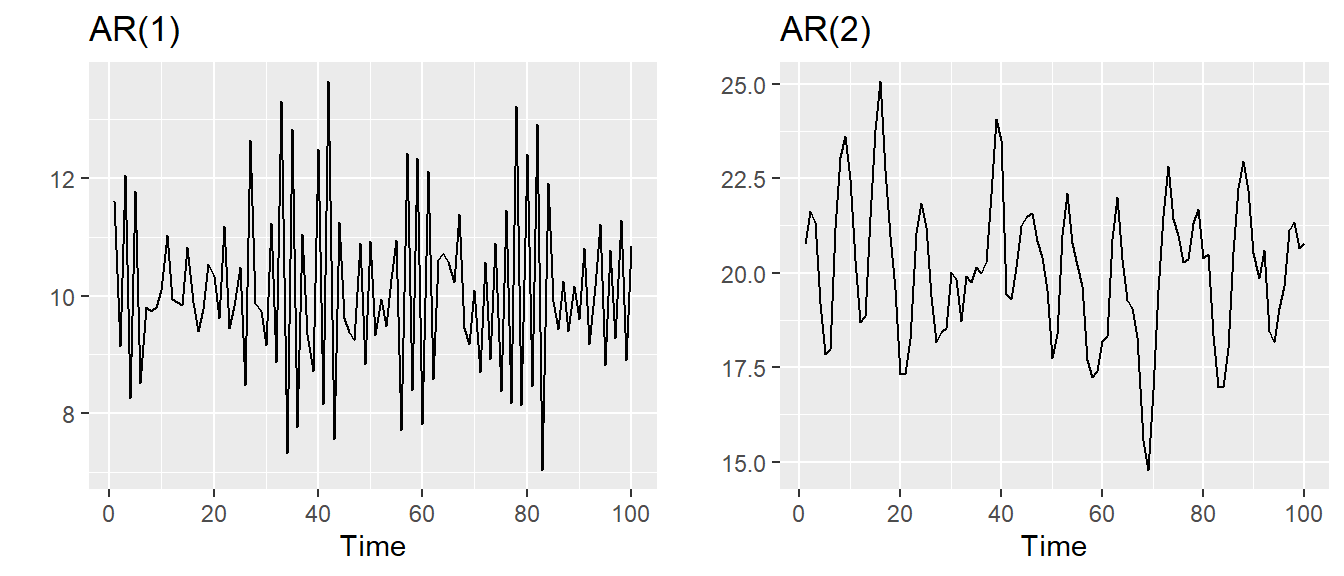

Autoregressive (AR) models:

$$y_t = c + \phi_1 y_{t-1} + \phi_2 y_{t-2} + \cdots + \phi_p y_{t-p} + \varepsilon_t$$

where $\varepsilon_t$ is white noise. This is a multiple regression with lagged values of $y_t$ as predictors. Autoregressive models are remarkably flexible at handling a wide range of different time series patterns.

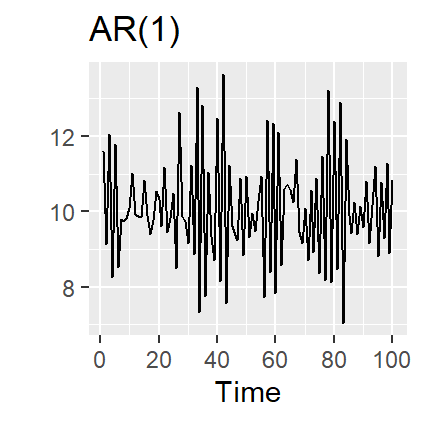

21.6.1 AR(1) model

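$y_t = c + \phi_1 y_{t-1} + \varepsilon_t$, where $\varepsilon_t$ is white noise.

- When $\phi_1 = 0$, $y_t$ is equivalent to white noise

- When $\phi_1 = 1$ and $c = 0$, $y_t$ is equivalent to a random walk

- When $\phi_1 = 1$ and $c \ne 0$, $y_t$ is equivalent to a random walk with drift

- When $\phi_1 < 0$, $y_t$ tends to oscillate between positive and negative values.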
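These special cases are easy to reproduce by simulation. The sketch below generates an AR(1) series, $y_t = c + \phi_1 y_{t-1} + \varepsilon_t$, from one fixed set of shocks under four parameter settings. The helper name `simulate_ar1`, the starting value of 0, the seed and the parameter values are assumptions for illustration.

```r
# Generate an AR(1) series y_t = const + phi * y_{t-1} + eps_t
# from a given vector of shocks (y0 is the assumed starting value)
simulate_ar1 <- function(eps, const, phi, y0 = 0) {
  y <- numeric(length(eps))
  prev <- y0
  for (t in seq_along(eps)) {
    y[t] <- const + phi * prev + eps[t]
    prev <- y[t]
  }
  y
}

set.seed(42)          # arbitrary seed
eps <- rnorm(100)     # white noise shocks

white_noise <- simulate_ar1(eps, const = 0,   phi = 0)
random_walk <- simulate_ar1(eps, const = 0,   phi = 1)
rw_drift    <- simulate_ar1(eps, const = 0.5, phi = 1)
oscillating <- simulate_ar1(eps, const = 0,   phi = -0.8)
```

Plotting the four series side by side (for example with `plot.ts(cbind(white_noise, random_walk, rw_drift, oscillating))`) shows how strongly the character of the series depends on $\phi_1$ and $c$.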

21.6.3 Stationarity conditions

We normally restrict autoregressive models to stationary data, and then some constraints on the values of the parameters are required.

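General condition for stationarity: Complex roots of $1 - \phi_1 z - \phi_2 z^2 - \cdots - \phi_p z^p$ lie outside the unit circle on the complex plane.

- For $p = 1$: $-1 < \phi_1 < 1$.

- For $p = 2$: $-1 < \phi_2 < 1$, $\phi_2 + \phi_1 < 1$, $\phi_2 - \phi_1 < 1$.

- More complicated conditions hold for $p \ge 3$.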

- Estimation software takes care of this.
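The general root condition can be checked numerically with base R's polyroot(), which takes polynomial coefficients in increasing order of power. The helper name `is_stationary` and the example coefficients are assumptions for illustration.

```r
# Check AR stationarity: all roots of 1 - phi_1 z - ... - phi_p z^p
# must lie outside the unit circle (modulus greater than 1)
is_stationary <- function(phi) {
  roots <- polyroot(c(1, -phi))   # coefficients in increasing powers of z
  all(Mod(roots) > 1)
}

is_stationary(0.5)          # AR(1) with |phi_1| < 1
is_stationary(1.2)          # AR(1) with |phi_1| > 1
is_stationary(c(0.5, 0.3))  # AR(2) satisfying all three AR(2) conditions
is_stationary(c(0.5, 0.6))  # AR(2) with phi_1 + phi_2 > 1
```

The same function works for any order $p$, which is convenient because the explicit inequalities become unwieldy beyond $p = 2$.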

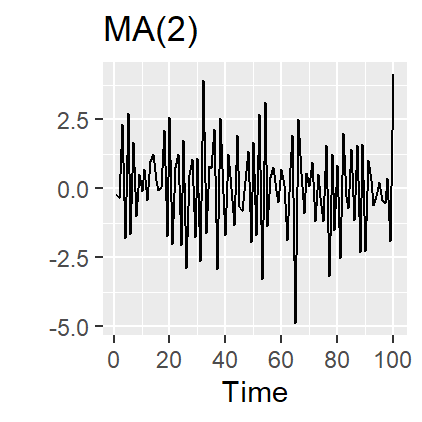

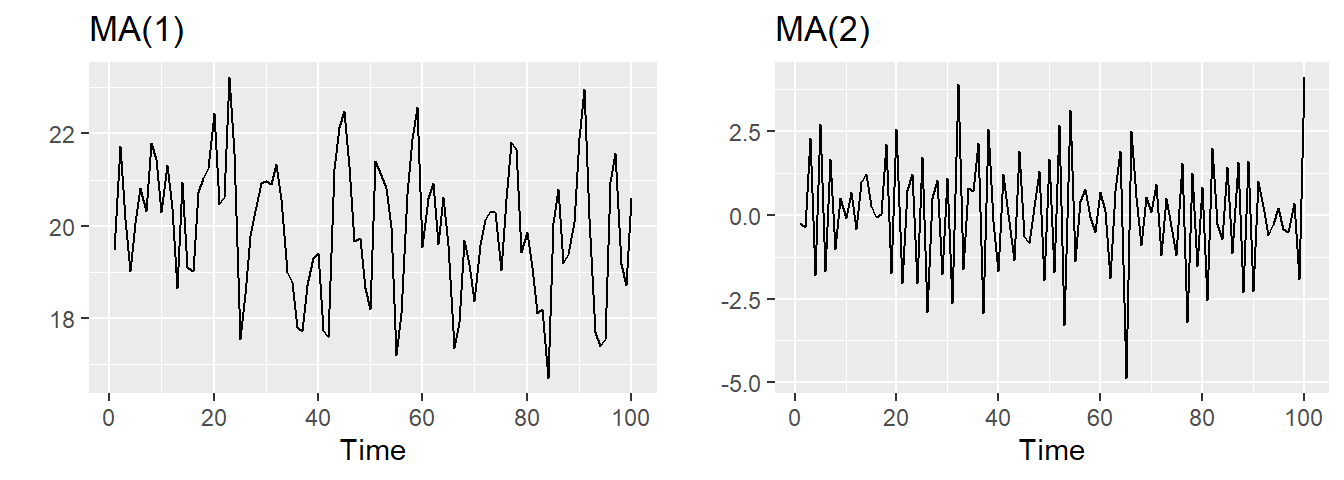

21.7 Moving Average (MA) models

Rather than using past values of the forecast variable in a regression, a moving average model uses past forecast errors in a regression-like model.

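Moving Average (MA) models:

$$y_t = c + \varepsilon_t + \theta_1 \varepsilon_{t-1} + \theta_2 \varepsilon_{t-2} + \cdots + \theta_q \varepsilon_{t-q}$$

where $\varepsilon_t$ is white noise.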

This is a multiple regression with past errors as predictors. Don’t confuse this with moving average smoothing!
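The distinction between an MA model and moving average smoothing can be made concrete in base R. The sketch below builds an MA(1) series from simulated errors, $y_t = c + \varepsilon_t + \theta_1 \varepsilon_{t-1}$, and then, separately, smooths that series with a 3-term moving average. The constant, the coefficient 0.6 and the seed are assumed values.

```r
set.seed(7)                 # arbitrary seed
eps   <- rnorm(200)         # white noise errors
const <- 10                 # assumed constant c
theta <- 0.6                # assumed MA(1) coefficient

# MA(1) model: y_t = c + eps_t + theta * eps_{t-1}
# (the first error has no predecessor, so the series has 199 values)
y <- const + eps[-1] + theta * eps[-length(eps)]

# Theoretical autocorrelations of this MA(1): non-zero at lag 1 only
rho <- ARMAacf(ma = theta, lag.max = 3)

# Moving average smoothing is a different thing: it averages nearby
# observations of y itself to estimate a trend
smoothed <- stats::filter(y, rep(1 / 3, 3), sides = 2)
```

The MA model describes how the series is generated from unobserved errors, whereas the smoother is a descriptive transformation of observed values.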

21.8 ARIMA models

AutoRegressive Moving Average (ARMA) models:

$$y_t = c + \phi_1 y_{t-1} + \cdots + \phi_p y_{t-p} + \theta_1 \varepsilon_{t-1} + \cdots + \theta_q \varepsilon_{t-q} + \varepsilon_t$$

- Predictors include both lagged values of $y_t$ and lagged errors.

- Conditions on the AR coefficients ensure stationarity.

- Conditions on the MA coefficients ensure invertibility.

- ARIMA combines the ARMA model with differencing.

ARIMA($p, d, q$) model:

AR: $p$ = order of the autoregressive part

I: $d$ = degree of first differencing involved

MA: $q$ = order of the moving average part

White noise model: ARIMA(0,0,0)

Random walk: ARIMA(0,1,0) with no constant

Random walk with drift: ARIMA(0,1,0) with a constant

AR($p$): ARIMA($p$,0,0)

MA($q$): ARIMA(0,0,$q$)
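It can help to see all three parts in one equation. Using the backshift operator $B$, defined by $B y_t = y_{t-1}$ (standard notation, introduced here as an assumption since the chapter does not define it explicitly), an ARIMA model with autoregressive order $p$, $d$ differences and moving average order $q$ can be written as:

```latex
\underbrace{(1 - \phi_1 B - \cdots - \phi_p B^p)}_{\text{AR}(p)}
\;\underbrace{(1 - B)^d}_{d \text{ differences}} \, y_t
= c + \underbrace{(1 + \theta_1 B + \cdots + \theta_q B^q)}_{\text{MA}(q)} \, \varepsilon_t
```

For example, setting $p = 0$, $d = 1$, $q = 0$ and $c = 0$ gives $(1 - B) y_t = \varepsilon_t$, that is $y_t = y_{t-1} + \varepsilon_t$, which is the random walk listed above.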

21.8.1 Exercise

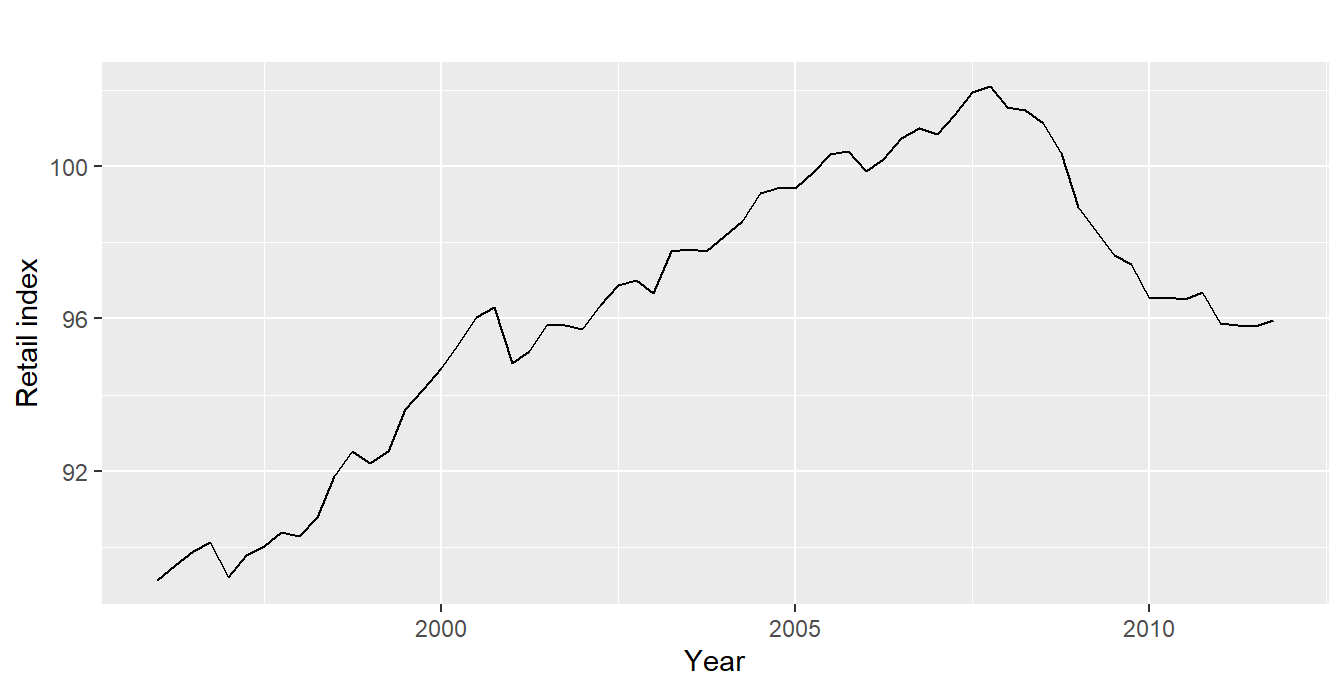

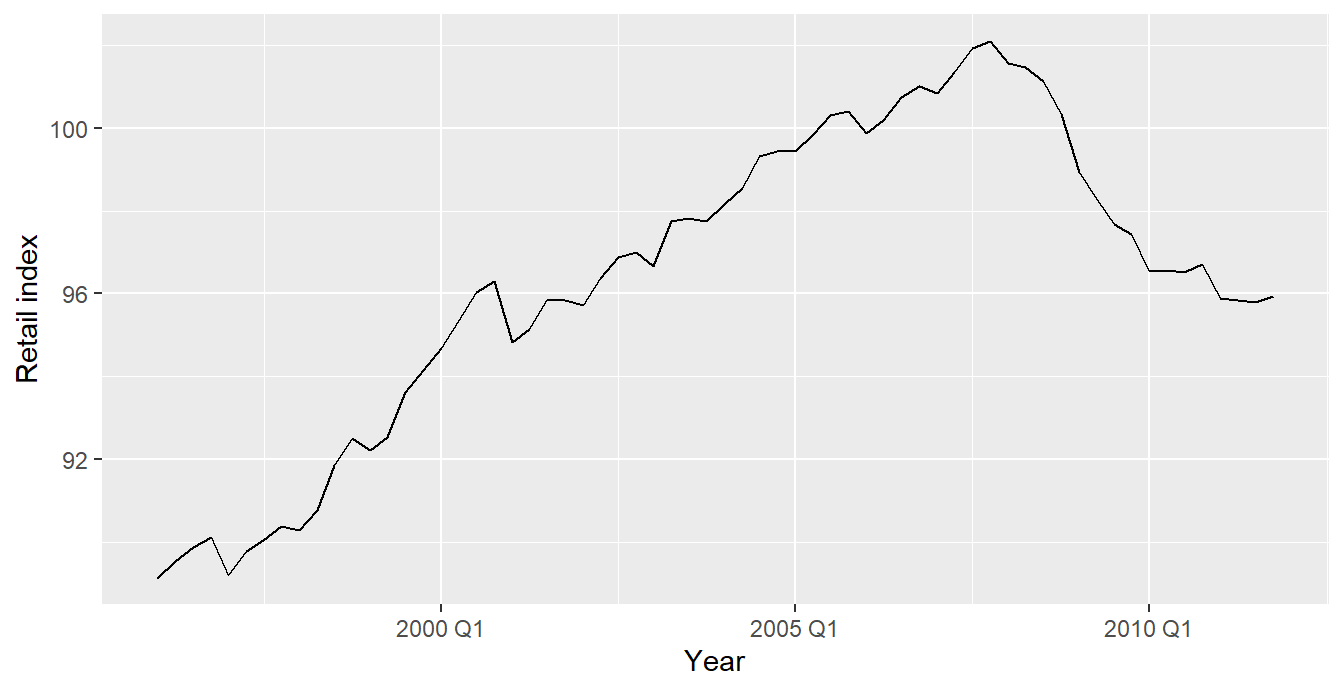

Now let's focus on the retail trade.

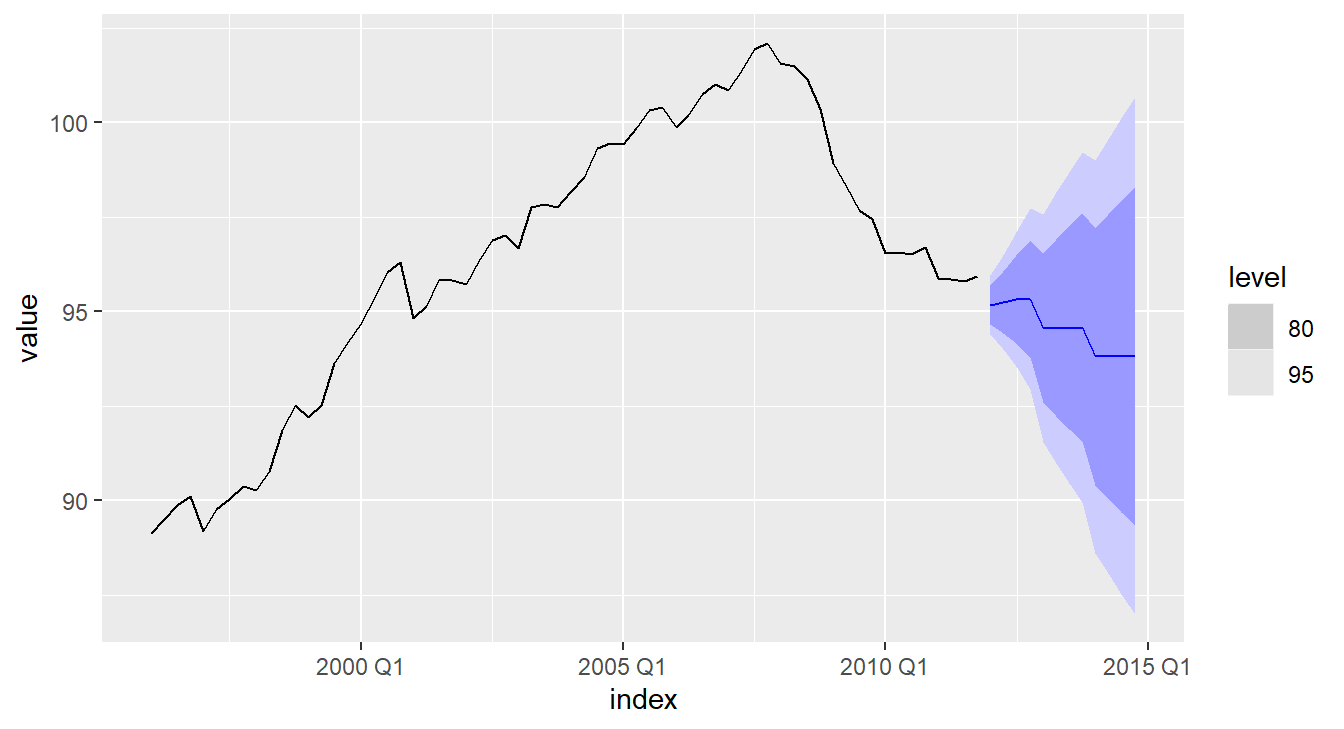

autoplot(euretail) +

xlab("Year") + ylab("Retail index")

The series is clearly non-stationary and seasonal, so we take a seasonal difference followed by a first difference to stabilise the mean.

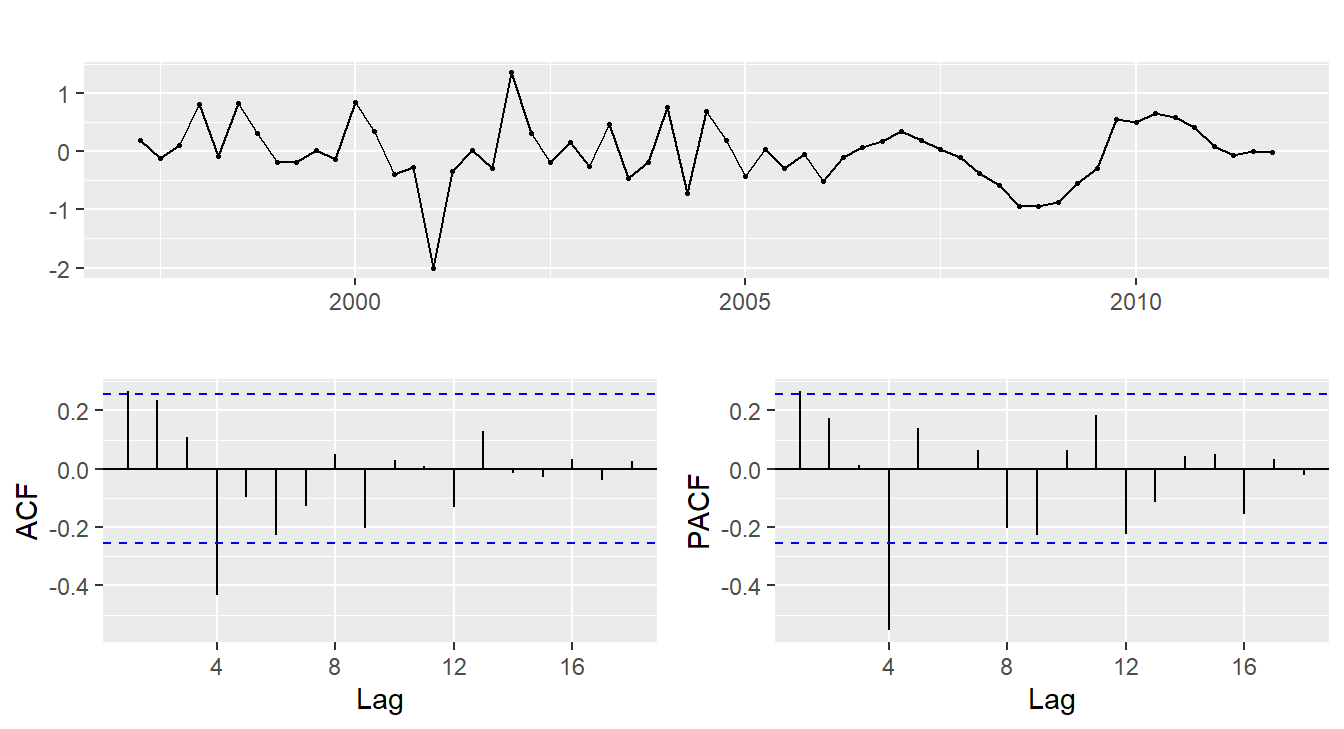

euretail %>% diff(lag=4) %>% diff() %>%

ggtsdisplay()

- $d = 1$ and $D = 1$ seem necessary.

- Significant spike at lag 1 in ACF suggests non-seasonal MA(1) component.

- Significant spike at lag 4 in ACF suggests seasonal MA(1) component.

- Initial candidate model: ARIMA(0,1,1)(0,1,1)$_4$.

- We could also have started with ARIMA(1,1,0)(1,1,0)$_4$.

fit <- Arima(euretail, order=c(0,1,1),

seasonal=c(0,1,1))

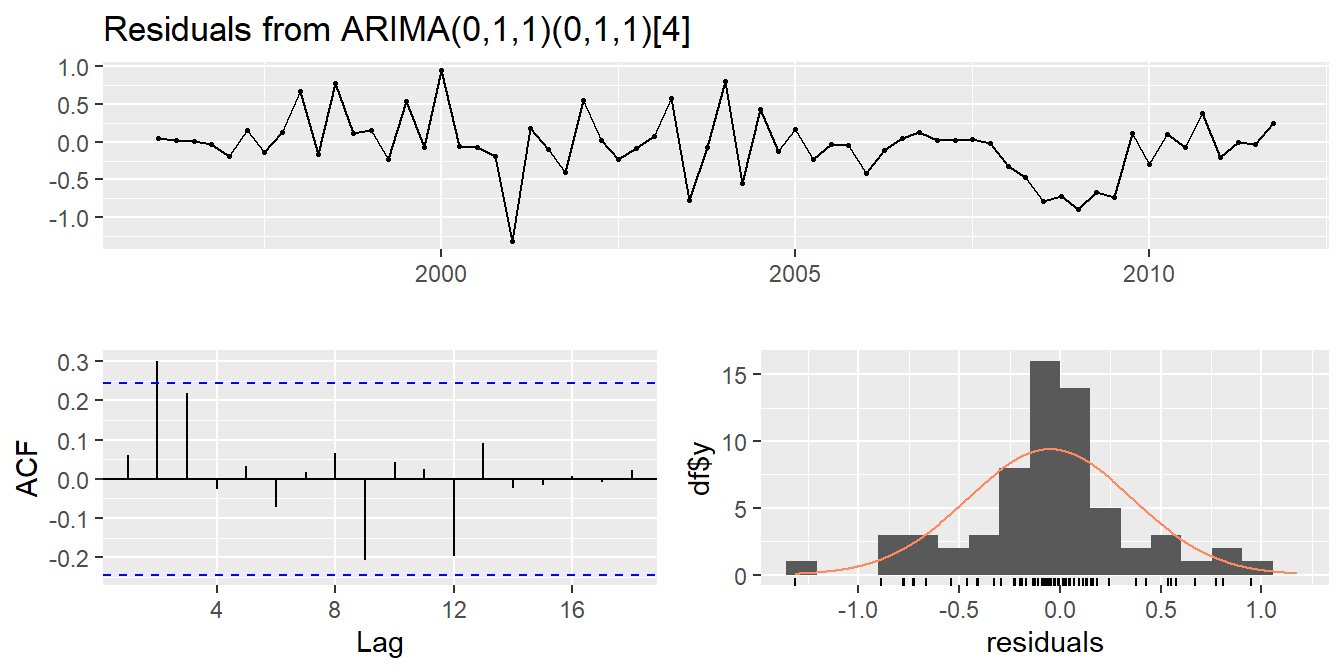

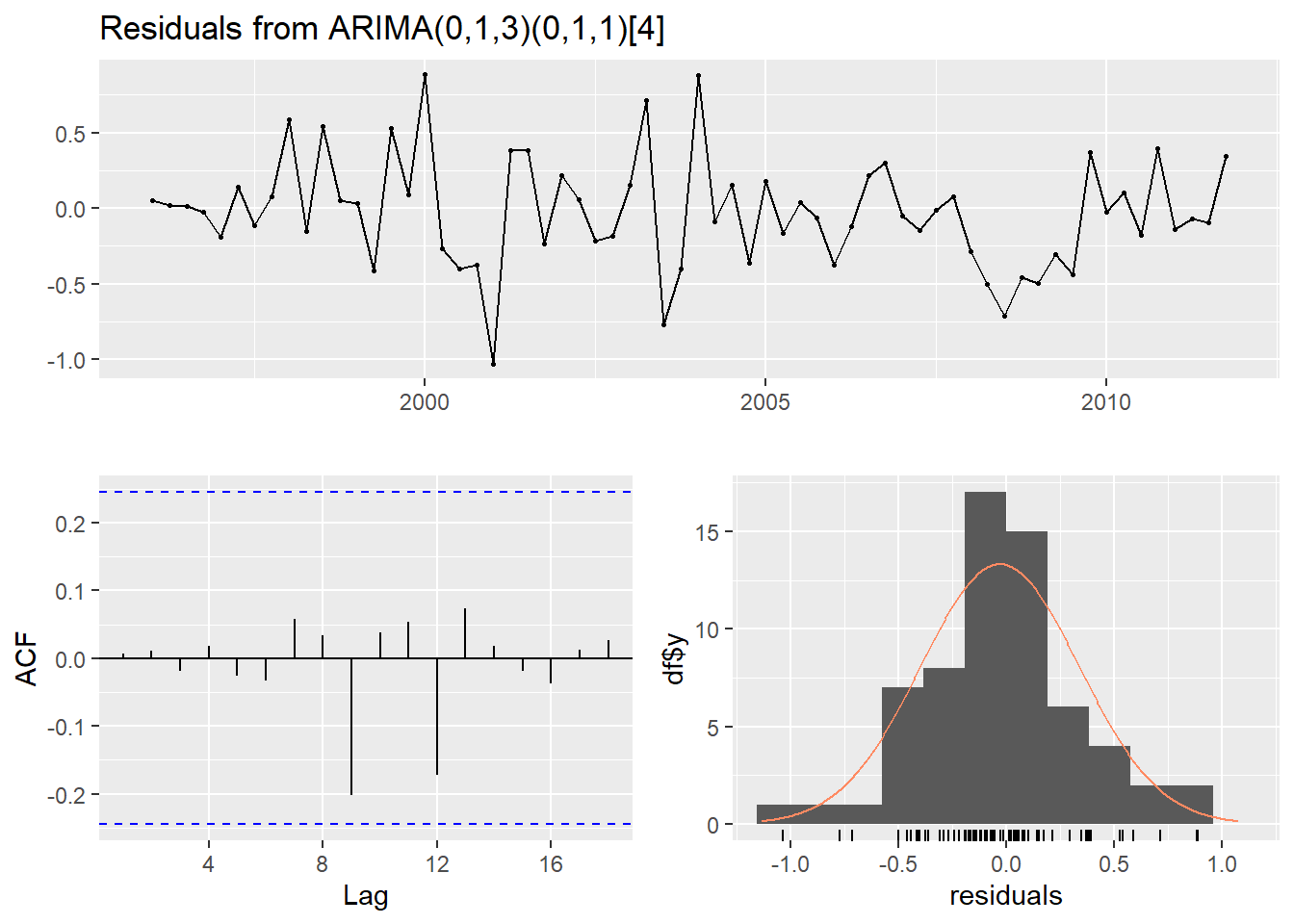

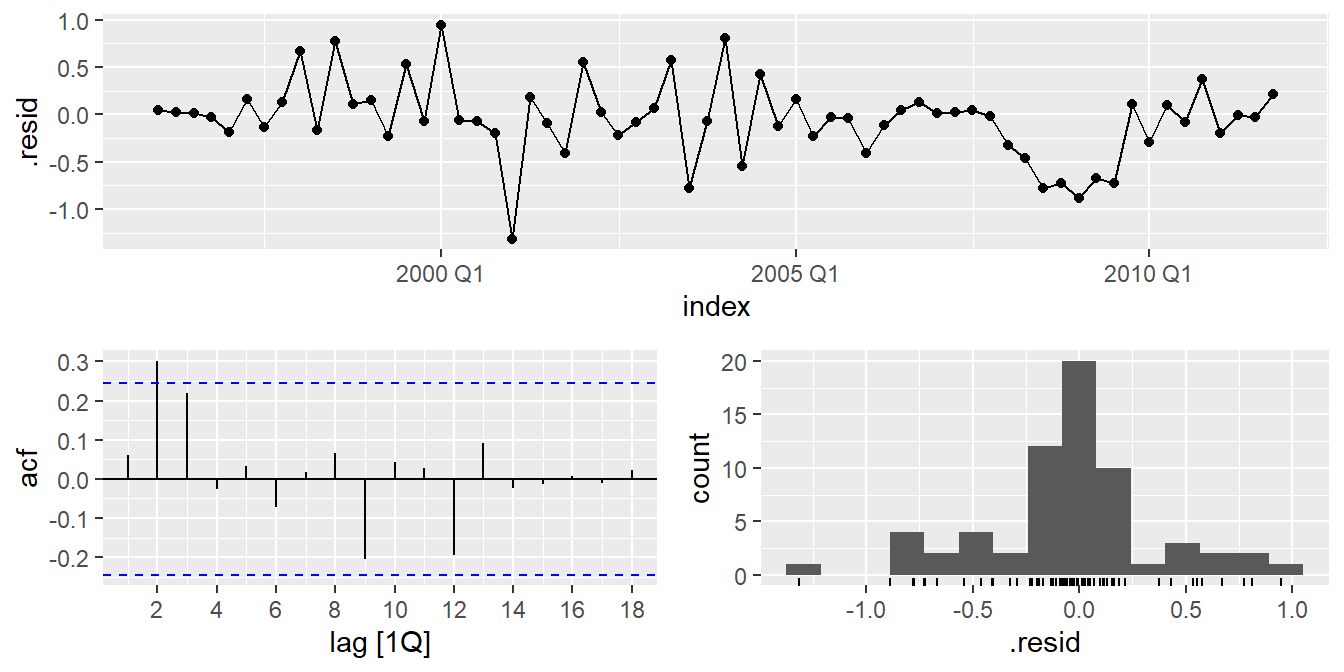

checkresiduals(fit)

##

## Ljung-Box test

##

## data: Residuals from ARIMA(0,1,1)(0,1,1)[4]

## Q* = 11, df = 6, p-value = 0.1

##

## Model df: 2. Total lags used: 8

- ACF and PACF of residuals show significant spikes at lag 2, and maybe lag 3.

- AICc of ARIMA(0,1,2)(0,1,1) model is 74.36.

- AICc of ARIMA(0,1,3)(0,1,1) model is 68.53.

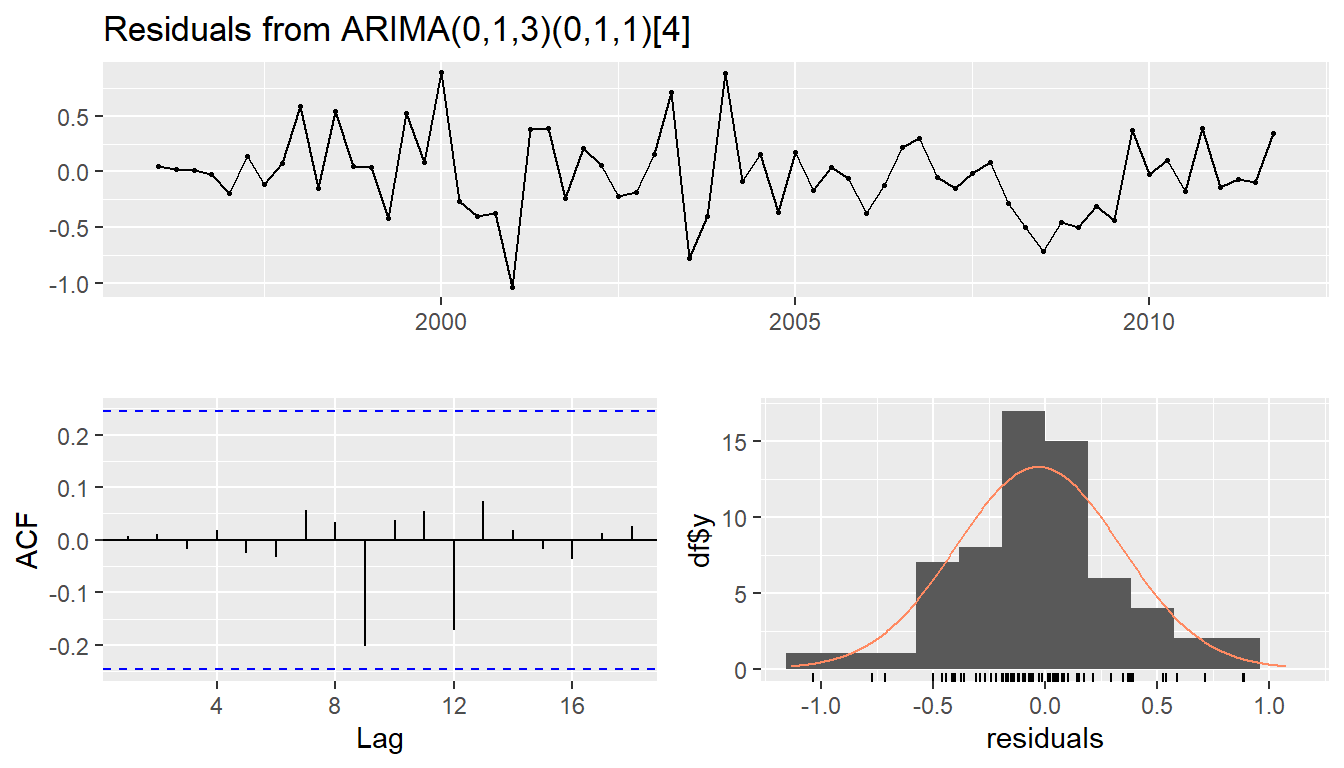

fit <- Arima(euretail, order=c(0,1,3),

seasonal=c(0,1,1))

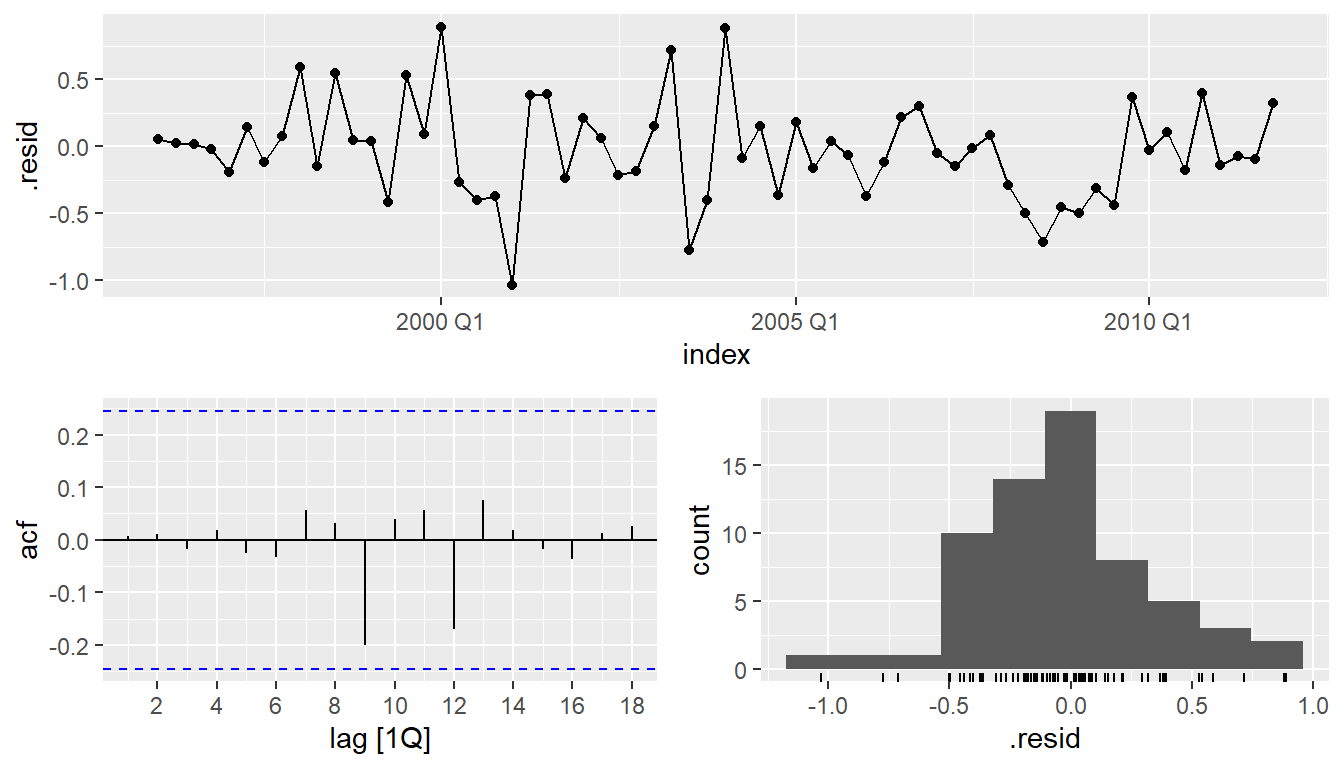

checkresiduals(fit)

##

## Ljung-Box test

##

## data: Residuals from ARIMA(0,1,3)(0,1,1)[4]

## Q* = 0.51, df = 4, p-value = 1

##

## Model df: 4. Total lags used: 8

(fit <- Arima(euretail, order=c(0,1,3),

seasonal=c(0,1,1)))

## Series: euretail

## ARIMA(0,1,3)(0,1,1)[4]

##

## Coefficients:

## ma1 ma2 ma3 sma1

## 0.263 0.370 0.419 -0.661

## s.e. 0.124 0.126 0.130 0.156

##

## sigma^2 = 0.156: log likelihood = -28.7

## AIC=67.4 AICc=68.53 BIC=77.78checkresiduals(fit)

##

## Ljung-Box test

##

## data: Residuals from ARIMA(0,1,3)(0,1,1)[4]

## Q* = 0.51, df = 4, p-value = 1

##

## Model df: 4. Total lags used: 8checkresiduals(fit, plot=FALSE)##

## Ljung-Box test

##

## data: Residuals from ARIMA(0,1,3)(0,1,1)[4]

## Q* = 0.51, df = 4, p-value = 1

##

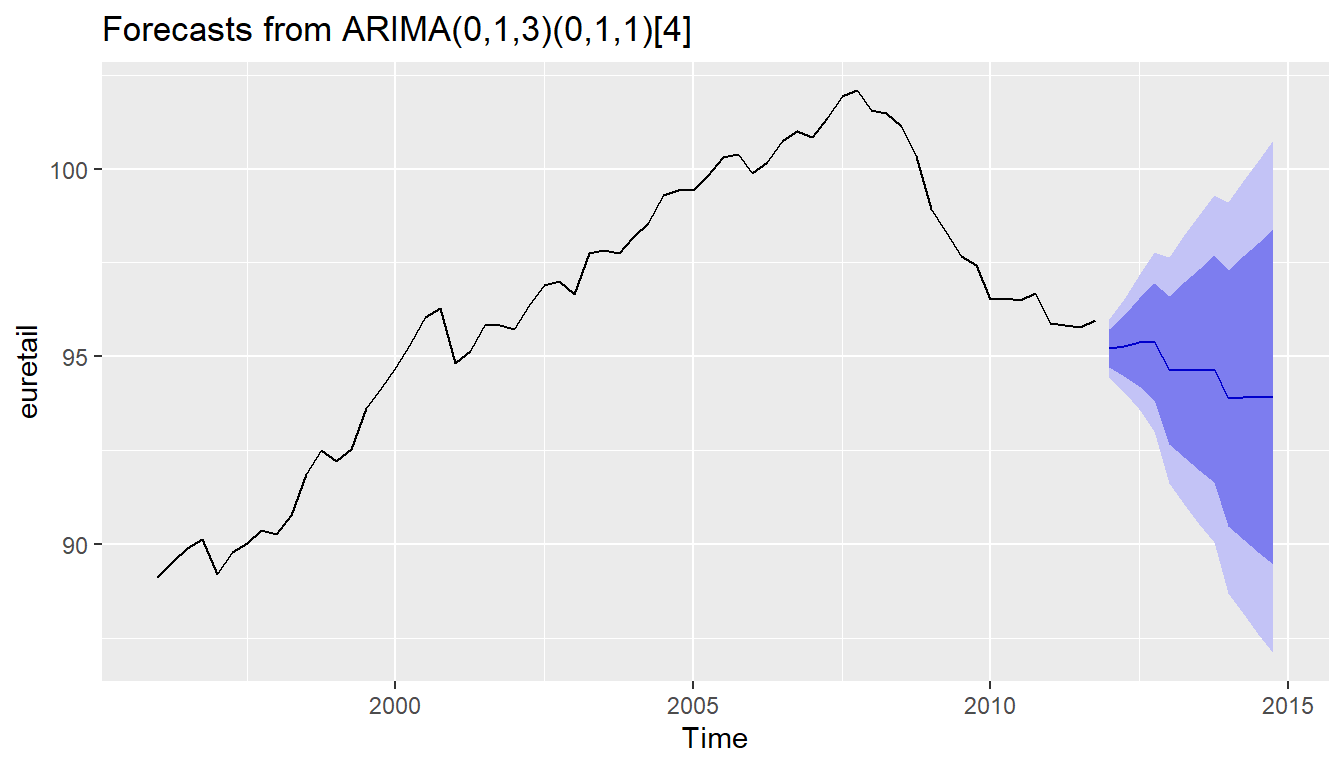

## Model df: 4. Total lags used: 8autoplot(forecast(fit, h=12))

auto.arima(euretail)

## Series: euretail

## ARIMA(0,1,3)(0,1,1)[4]

##

## Coefficients:

## ma1 ma2 ma3 sma1

## 0.263 0.370 0.419 -0.661

## s.e. 0.124 0.126 0.130 0.156

##

## sigma^2 = 0.156: log likelihood = -28.7

## AIC=67.4 AICc=68.53 BIC=77.78

auto.arima(euretail,

stepwise=FALSE, approximation=FALSE)

## Series: euretail

## ARIMA(0,1,3)(0,1,1)[4]

##

## Coefficients:

## ma1 ma2 ma3 sma1

## 0.263 0.370 0.419 -0.661

## s.e. 0.124 0.126 0.130 0.156

##

## sigma^2 = 0.156: log likelihood = -28.7

## AIC=67.4 AICc=68.53 BIC=77.78

21.9 Seasonal ARIMA models

A seasonal ARIMA model is formed by including additional seasonal terms in the ARIMA models we have seen so far. The seasonal part of the model consists of terms that are similar to the non-seasonal components of the model, but involve backshifts of the seasonal period.

The modelling procedure is almost the same as for non-seasonal data, except that we need to select seasonal AR and MA terms as well as the non-seasonal components of the model!

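| ARIMA | $(p, d, q)$ | $(P, D, Q)_m$ |
|---|---|---|
| | Non-seasonal part of the model | Seasonal part of the model |

where $m$ = number of observations per year.

E.g., ARIMA$(1,1,1)(1,1,1)_4$ model (without constant):

$$(1 - \phi_1 B)(1 - \Phi_1 B^4)(1 - B)(1 - B^4)\, y_t = (1 + \theta_1 B)(1 + \Theta_1 B^4)\, \varepsilon_t$$

All the factors can be multiplied out and the general model written as follows:

$$\begin{aligned} y_t = {} & (1 + \phi_1) y_{t-1} - \phi_1 y_{t-2} + (1 + \Phi_1) y_{t-4} - (1 + \phi_1 + \Phi_1 + \phi_1\Phi_1) y_{t-5} + (\phi_1 + \phi_1\Phi_1) y_{t-6} \\ & - \Phi_1 y_{t-8} + (\Phi_1 + \phi_1\Phi_1) y_{t-9} - \phi_1\Phi_1 y_{t-10} + \varepsilon_t + \theta_1 \varepsilon_{t-1} + \Theta_1 \varepsilon_{t-4} + \theta_1\Theta_1 \varepsilon_{t-5} \end{aligned}$$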
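Multiplying out the factors by hand is error-prone, so it can be useful to check the expansion numerically. The sketch below takes a quarterly ARIMA(1,1,1)(1,1,1) model with $m = 4$ as its example and multiplies the backshift polynomials as coefficient vectors. The helper `poly_mult` and the four coefficient values are assumptions chosen purely for illustration.

```r
# Multiply two polynomials in B given as coefficient vectors
# (element i holds the coefficient of B^(i - 1))
poly_mult <- function(a, b) {
  out <- numeric(length(a) + length(b) - 1)
  for (i in seq_along(a)) {
    for (j in seq_along(b)) {
      out[i + j - 1] <- out[i + j - 1] + a[i] * b[j]
    }
  }
  out
}

phi   <- 0.5;  Phi   <- 0.3    # assumed AR and seasonal AR coefficients
theta <- 0.4;  Theta <- -0.6   # assumed MA and seasonal MA coefficients

ar_part   <- c(1, -phi)              # 1 - phi B
sar_part  <- c(1, 0, 0, 0, -Phi)     # 1 - Phi B^4
diff_part <- c(1, -1)                # 1 - B
sdiff     <- c(1, 0, 0, 0, -1)       # 1 - B^4

# Left-hand side polynomial applied to y_t (powers B^0 ... B^10)
lhs <- poly_mult(poly_mult(ar_part, sar_part), poly_mult(diff_part, sdiff))

# Right-hand side polynomial applied to the errors (powers B^0 ... B^5)
rhs <- poly_mult(c(1, theta), c(1, 0, 0, 0, Theta))

# Coefficients of y_{t-1}, ..., y_{t-10} after moving them to the right
y_lag_coefs <- -lhs[-1]
```

The non-zero entries of `y_lag_coefs` sit at lags 1, 2, 4, 5, 6, 8, 9 and 10, and the non-zero error coefficients at lags 0, 1, 4 and 5, which is the lag structure expected from a model with both a first and a seasonal difference at $m = 4$.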

21.9.1 Common ARIMA models

The Census Bureaus (in many countries) use the following models most often:

ARIMA(0,1,1)(0,1,1) with log transformation

ARIMA(0,1,2)(0,1,1) with log transformation

ARIMA(2,1,0)(0,1,1) with log transformation

ARIMA(0,2,2)(0,1,1) with log transformation

ARIMA(2,1,2)(0,1,1) with no transformation

The seasonal part of an AR or MA model will be seen in the seasonal lags of the PACF and ACF.

For monthly data ($m = 12$), ARIMA(0,0,0)(0,0,1)$_{12}$ will show:

- a spike at lag 12 in the ACF but no other significant spikes.

- exponential decay in the seasonal lags of the PACF; that is, at lags 12, 24, 36, etc.

ARIMA(0,0,0)(1,0,0)$_{12}$ will show:

- exponential decay in the seasonal lags of the ACF.

- a single significant spike at lag 12 in the PACF.
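These signatures can be confirmed from the theoretical ACF and PACF using base R's ARMAacf(), by writing the seasonal MA(1) and seasonal AR(1) models for monthly data as ordinary MA(12) and AR(12) models with zeros at the non-seasonal lags. The coefficient value 0.6 is an assumption for illustration.

```r
Theta <- 0.6   # assumed seasonal MA(1) coefficient
Phi   <- 0.6   # assumed seasonal AR(1) coefficient
m     <- 12    # monthly seasonality

# Seasonal MA(1): y_t = eps_t + Theta * eps_{t-12}
sma_coefs <- c(rep(0, m - 1), Theta)
acf_sma  <- ARMAacf(ma = sma_coefs, lag.max = 36)               # lags 0..36
pacf_sma <- ARMAacf(ma = sma_coefs, lag.max = 36, pacf = TRUE)  # lags 1..36

# Seasonal AR(1): y_t = Phi * y_{t-12} + eps_t
sar_coefs <- c(rep(0, m - 1), Phi)
acf_sar  <- ARMAacf(ar = sar_coefs, lag.max = 36)
pacf_sar <- ARMAacf(ar = sar_coefs, lag.max = 36, pacf = TRUE)

# Values at the seasonal lags 12, 24, 36
print(round(acf_sma[c("12", "24", "36")], 3))   # single spike at lag 12
print(round(pacf_sma[c(12, 24, 36)], 3))        # shrinks over seasonal lags
print(round(acf_sar[c("12", "24", "36")], 3))   # shrinks over seasonal lags
print(round(pacf_sar[c(12, 24, 36)], 3))        # single spike at lag 12
```

Sample ACF and PACF plots from real data will be noisier than these theoretical values, so in practice the patterns are read off approximately.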

21.9.2 Exercise

Let's continue the analysis on the quarterly retail trade:

eu_retail <- as_tsibble(fpp2::euretail)

eu_retail %>% autoplot(value) +

xlab("Year") + ylab("Retail index")

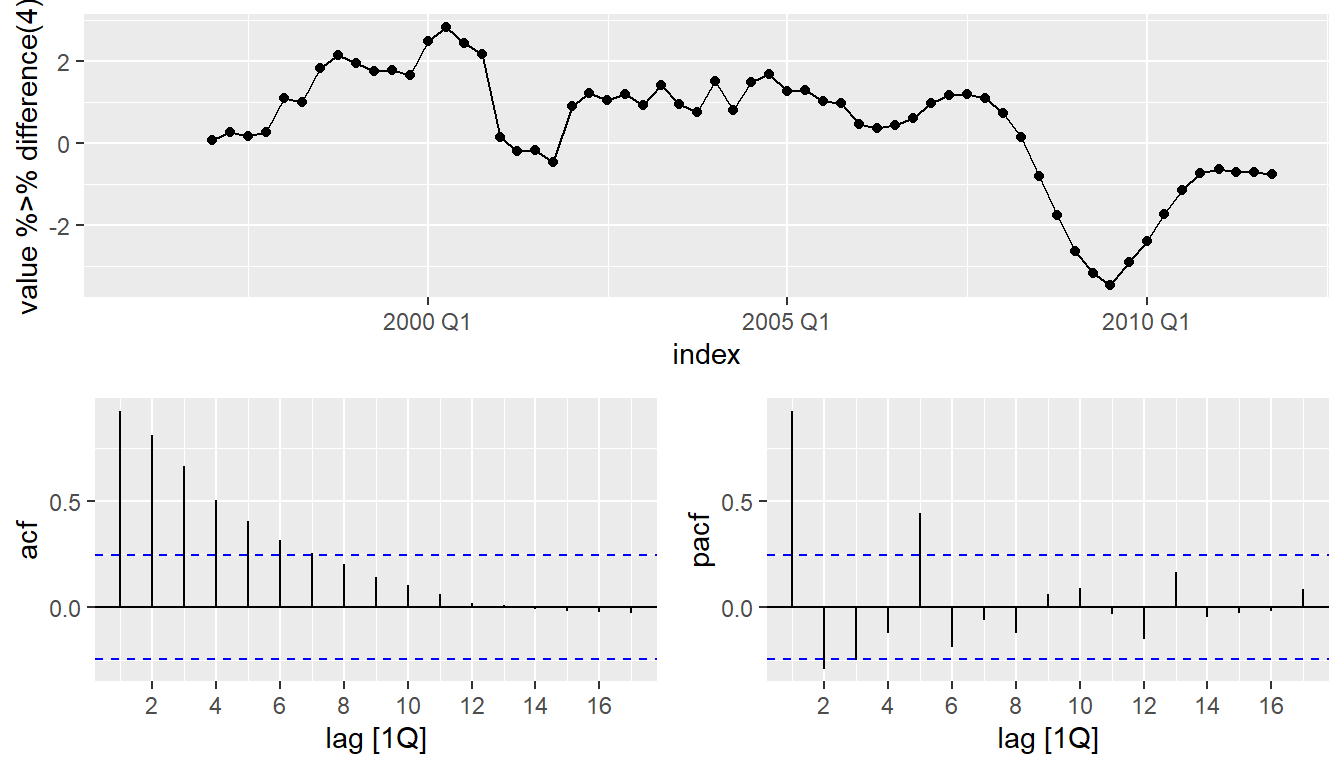

eu_retail %>% gg_tsdisplay(

value %>% difference(4), plot_type='partial')

eu_retail %>% gg_tsdisplay(

value %>% difference(4) %>% difference(1),plot_type='partial')

- $d = 1$ and $D = 1$ seem necessary.

- Significant spike at lag 1 in ACF suggests non-seasonal MA(1) component.

- Significant spike at lag 4 in ACF suggests seasonal MA(1) component.

- Initial candidate model: ARIMA(0,1,1)(0,1,1)$_4$.

- We could also have started with ARIMA(1,1,0)(1,1,0)$_4$.

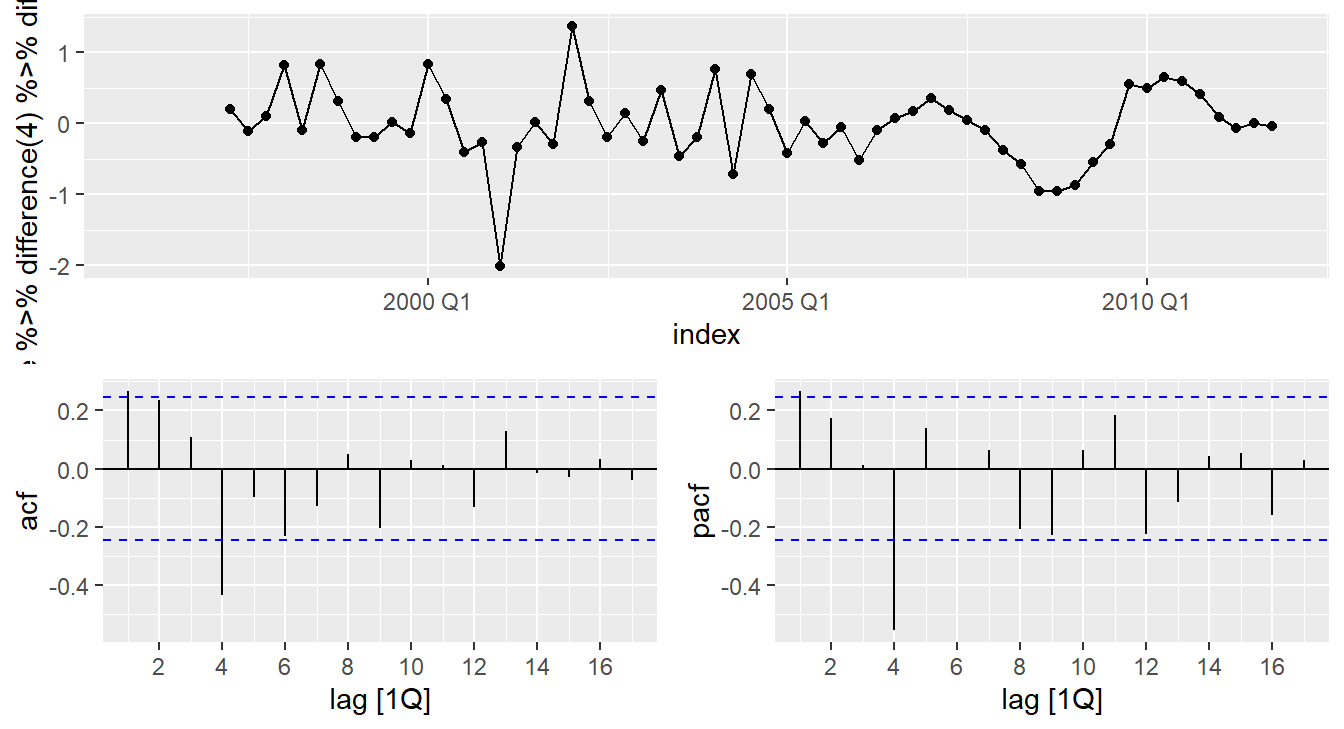

fit <- eu_retail %>%

model(arima = ARIMA(value ~ pdq(0,1,1) + PDQ(0,1,1)))

augment(fit) %>% gg_tsdisplay(.resid, plot_type = "hist")

augment(fit) %>%

features(.resid, ljung_box, lag = 8, dof = 2)

## # A tibble: 1 × 3

## .model lb_stat lb_pvalue

## <chr> <dbl> <dbl>

## 1 arima 10.7 0.0997

aicc <- eu_retail %>%

model(

mdl_1 = ARIMA(value ~ pdq(0,1,1) + PDQ(0,1,1)),

mdl_2 = ARIMA(value ~ pdq(0,1,2) + PDQ(0,1,1)),

mdl_3 = ARIMA(value ~ pdq(0,1,3) + PDQ(0,1,1)),

mdl_4 = ARIMA(value ~ pdq(0,1,4) + PDQ(0,1,1))

) %>%

glance %>%

pull(AICc)

- ACF and PACF of residuals show significant spikes at lag 2, and maybe lag 3.

- AICc of ARIMA(0,1,1)(0,1,1) model is 75.72

- AICc of ARIMA(0,1,2)(0,1,1) model is 74.27.

- AICc of ARIMA(0,1,3)(0,1,1) model is 68.39.

- AICc of ARIMA(0,1,4)(0,1,1) model is 70.73.
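The AICc adds a small-sample penalty to the AIC. As a rough check, the sketch below recomputes the AICc of the ARIMA(0,1,3)(0,1,1) model from the AIC of 67.26 that its fitted-model report lists. The function name `aicc` is hypothetical, and the observation count is an assumption: the series is taken to have 64 quarterly observations, of which $d + D \cdot m = 5$ are lost to differencing.

```r
# Corrected AIC: AIC plus a small-sample penalty.
# k = number of estimated parameters (including the error variance),
# n = number of observations left after differencing.
aicc <- function(aic, k, n) {
  aic + (2 * k * (k + 1)) / (n - k - 1)
}

# ARIMA(0,1,3)(0,1,1)[4]: 4 coefficients + sigma^2 gives k = 5.
# Assumption: 64 quarterly observations, of which 5 are lost to
# differencing, leaving n = 59.
aicc_013 <- aicc(aic = 67.26, k = 5, n = 59)
print(round(aicc_013, 2))
```

Under these assumptions the result agrees with the AICc of 68.39 quoted for this model, which suggests the penalty term is being applied to the differenced sample size.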

fit <- eu_retail %>%

model(

arima013011 = ARIMA(value ~ pdq(0,1,3) + PDQ(0,1,1))

)

report(fit)

## Series: value

## Model: ARIMA(0,1,3)(0,1,1)[4]

##

## Coefficients:

## ma1 ma2 ma3 sma1

## 0.2630 0.3694 0.4200 -0.6636

## s.e. 0.1237 0.1255 0.1294 0.1545

##

## sigma^2 estimated as 0.156: log likelihood=-28.63

## AIC=67.26 AICc=68.39 BIC=77.65

augment(fit) %>%

gg_tsdisplay(.resid, plot_type = "hist")

augment(fit) %>%

features(.resid, ljung_box, lag = 8, dof = 4)

## # A tibble: 1 × 3

## .model lb_stat lb_pvalue

## <chr> <dbl> <dbl>

## 1 arima013011 0.511 0.972

fit %>% forecast(h = "3 years") %>%

autoplot(eu_retail)

eu_retail %>% model(ARIMA(value)) %>% report()

## Series: value

## Model: ARIMA(0,1,3)(0,1,1)[4]

##

## Coefficients:

## ma1 ma2 ma3 sma1

## 0.2630 0.3694 0.4200 -0.6636

## s.e. 0.1237 0.1255 0.1294 0.1545

##

## sigma^2 estimated as 0.156: log likelihood=-28.63

## AIC=67.26 AICc=68.39 BIC=77.65

eu_retail %>% model(ARIMA(value, stepwise = FALSE,

approximation = FALSE)) %>% report()

## Series: value

## Model: ARIMA(0,1,3)(0,1,1)[4]

##

## Coefficients:

## ma1 ma2 ma3 sma1

## 0.2630 0.3694 0.4200 -0.6636

## s.e. 0.1237 0.1255 0.1294 0.1545

##

## sigma^2 estimated as 0.156: log likelihood=-28.63

## AIC=67.26 AICc=68.39 BIC=77.65